“Vega” Instruction Set Architecture

Preface

About This Document

This document describes the environment, organization and program state of AMD GCN “VEGA” Generation devices. It details the instruction set and the microcode formats native to this family of processors that are accessible to programmers and compilers.

The document specifies the instructions (including the format of each type of instruction) and the relevant program state (including how the program state interacts with the instructions). Some instruction fields are mutually dependent; not all possible settings for all fields are legal. This document specifies the valid combinations.

The main purposes of this document are to:

- Specify the language constructs and behavior, including the organization of each type of instruction in both text syntax and binary format.
- Provide a reference of instruction operation that compiler writers can use to maximize performance of the processor.

Audience

This document is intended for programmers writing application and system software, including operating systems, compilers, loaders, linkers, device drivers, and system utilities. It assumes that programmers are writing compute-intensive parallel applications (streaming applications) and assumes an understanding of requisite programming practices.

Organization

Conventions

The following conventions are used in this document:

| Convention | Meaning |
|---|---|
| mono-spaced font | A filename, file path, or code. |
| * | Any number of alphanumeric characters in the name of a code format, parameter, or instruction. |
| < > | Angle brackets denote streams. |
| [1,2) | A range that includes the left-most value (in this case, 1) but excludes the right-most value (in this case, 2). |
| [1,2] | A range that includes both the left-most and right-most values. |
| {x \| y} | One of the multiple options listed. In this case, x or y. |
| 0.0 | A single-precision (32-bit) floating-point value. |
| 1011b | A binary value, in this example a 4-bit value. |
| 7:4 | A bit range, from bit 7 to bit 4, inclusive. The high-order bit is shown first. |
| italicized word or phrase | The first use of a term or concept basic to the understanding of stream computing. |
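This minimal Python sketch makes the `7:4` bit-range notation and the `1011b` binary notation concrete. It extracts an inclusive high:low field from an integer. The helper name `bits` is hypothetical and not part of any AMD tool.

```python
def bits(value: int, hi: int, lo: int) -> int:
    """Return bits hi:lo of value, inclusive (high-order bit named first)."""
    if hi < lo:
        raise ValueError("hi must be >= lo")
    width = hi - lo + 1
    return (value >> lo) & ((1 << width) - 1)


# 0xB0 is 1011_0000b, so bits 7:4 hold 1011b.
field = bits(0xB0, 7, 4)
print(f"{field:04b}b")  # prints 1011b
```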

Differences Between VEGA and Previous Devices¶

Summary of kernel instruction changes in Vega GPUs:

New packed 16-bit math instructions.

V_PK_MAD_I16 V_PK_MUL_LO_U16 V_PK_ADD_I16 V_PK_SUB_I16 V_PK_LSHLREV_B16 V_PK_LSHRREV_B16 V_PK_ASHRREV_I16 V_PK_MAX_I16 V_PK_MIN_I16 V_PK_MAD_U16 V_PK_ADD_U16 V_PK_SUB_U16 V_PK_MAX_U16 V_PK_MIN_U16 V_PK_FMA_F16 V_PK_ADD_F16 V_PK_MUL_F16 V_PK_MIN_F16 V_PK_MAX_F16 V_MAD_MIX_F32 V_MAD_MIXLO_F16 V_MAD_MIXHI_F16 S_PACK_{LL,LH,HH}_B16_B32

TMA and TBA registers are stored one per VM-ID, not per draw or dispatch.

Added Image operations support 16-bit address and data.

Added Global and Scratch memory read/write operations.

Also added Scratch load/store to scalar memory.

Added Scalar memory atomic instructions.

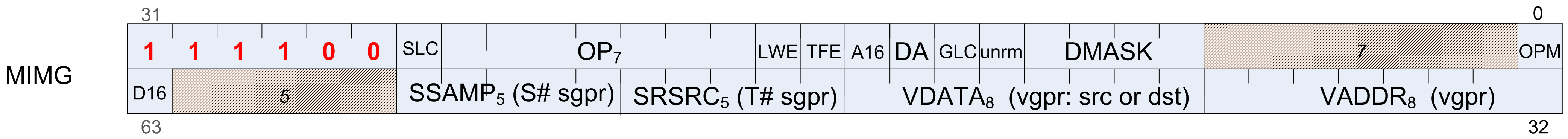

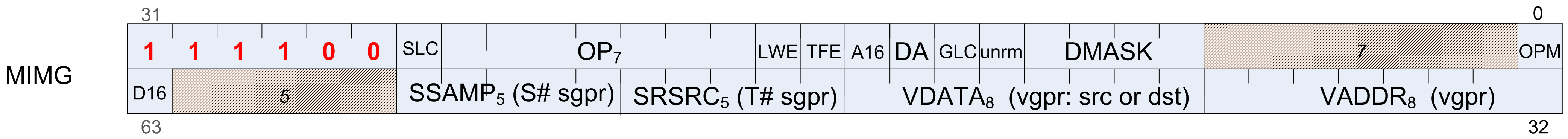

MIMG Microcode format: removed the R128 bit.

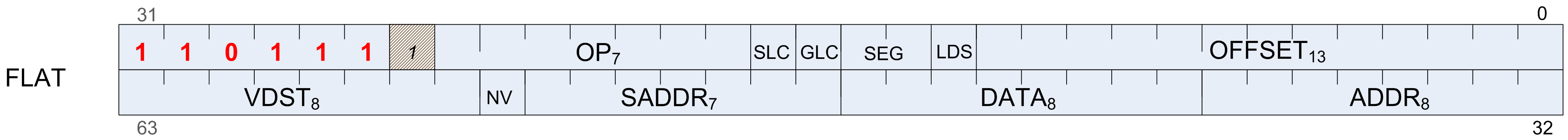

FLAT Microcode format: added an offset field.

Removed V_MOVEREL instructions.

Added control over arithmetic overflow for FP16 VALU operations.

Modified bit packing of surface descriptors and samplers:

T#: removed heap, elem_size, last_array, interlaced, uservm_mode bits.

V#: removed mtype.

S#: removed astc_hdr field.

Contact Information

For general information on AMD Accelerated Parallel Processing development, see the AMD developer website (http://developer.amd.com/).

For information about developing with AMD Accelerated Parallel Processing, see http://developer.amd.com/amd-accelerated-parallel-processing-app-sdk/ on the AMD developer website.

We also have a growing community of AMD Accelerated Parallel Processing users. Come visit us at the AMD Accelerated Parallel Processing Developer Forum (http://developer.amd.com/openclforum) to find out what applications other users are trying on their AMD Accelerated Parallel Processing products.

Introduction

AMD GCN processors implement a parallel micro-architecture that provides an excellent platform not only for computer graphics applications but also for general-purpose data parallel applications. Any data-intensive application that requires high bandwidth or is computationally intensive is a candidate for running on an AMD GCN processor.

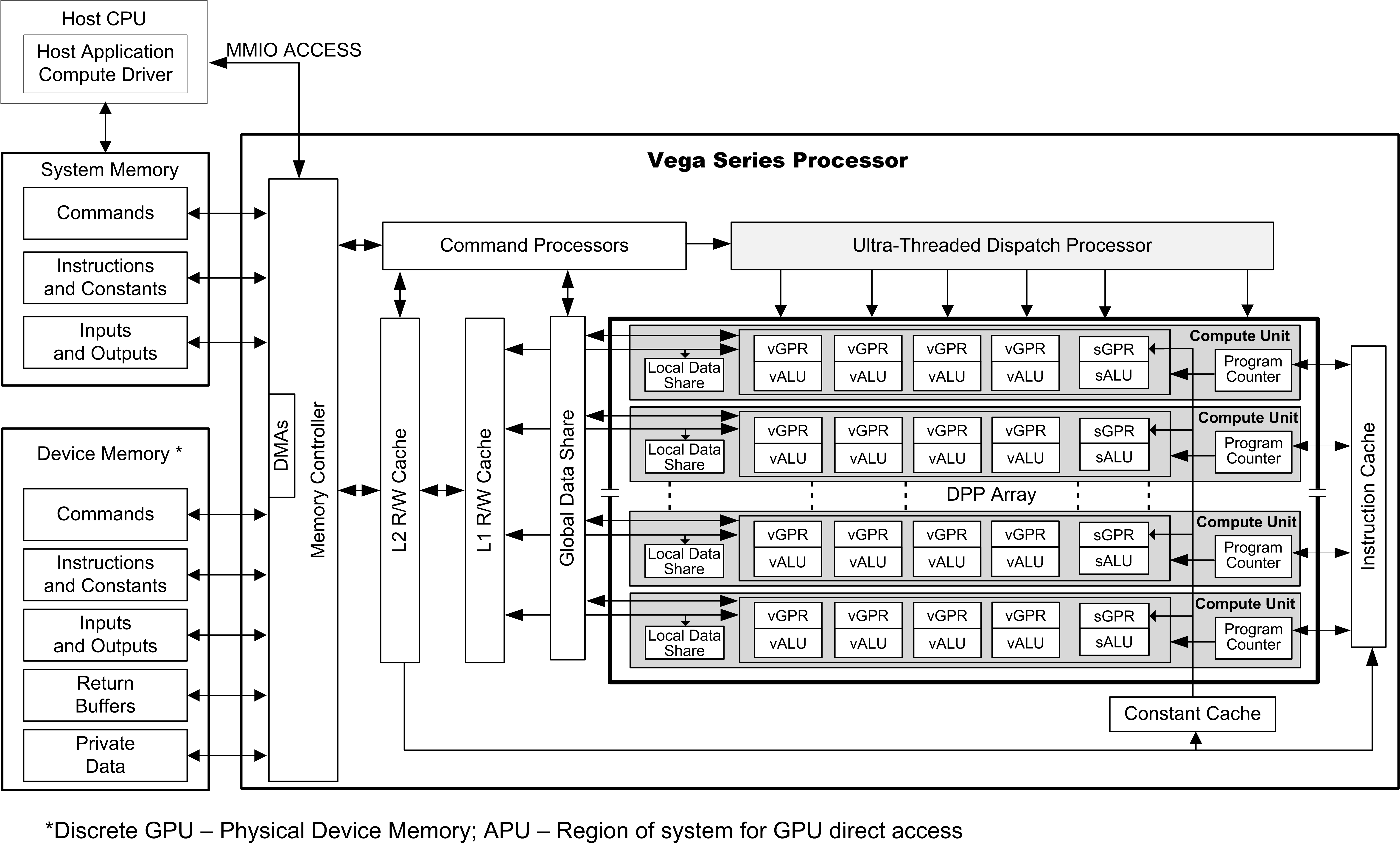

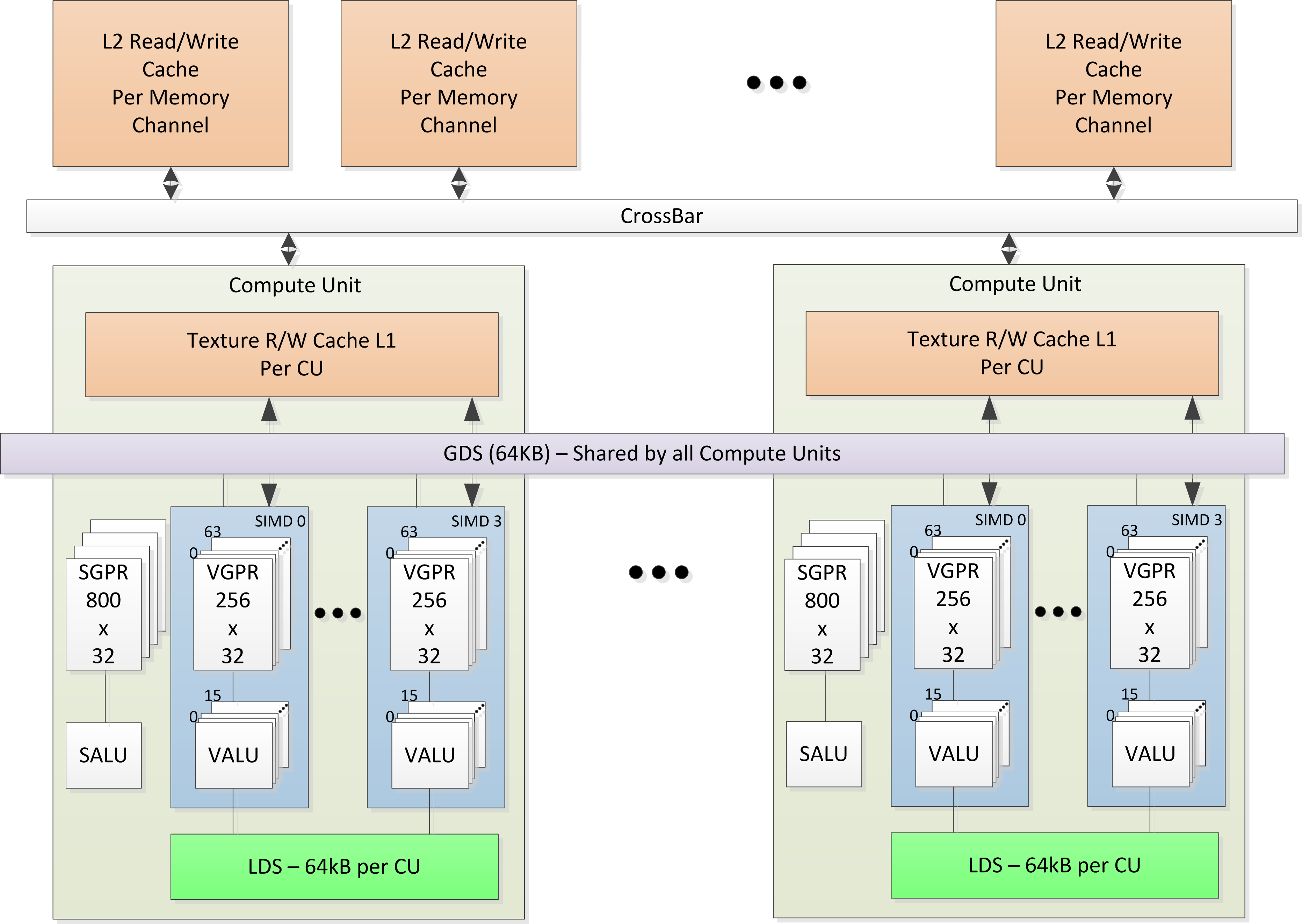

The figure below shows a block diagram of the AMD GCN Vega Generation series processors.

AMD GCN VEGA Generation Series Block Diagram

The GCN device includes a data-parallel processor (DPP) array, a command processor, a memory controller, and other logic (not shown). The GCN command processor reads commands that the host has written to memory-mapped GCN registers in the system-memory address space. The command processor sends hardware-generated interrupts to the host when the command is completed. The GCN memory controller has direct access to all GCN device memory and the host-specified areas of system memory. To satisfy read and write requests, the memory controller performs the functions of a direct-memory access (DMA) controller, including computing memory-address offsets based on the format of the requested data in memory. In the GCN environment, a complete application includes two parts:

- a program running on the host processor, and
- programs, called kernels, running on the GCN processor.

The GCN programs are controlled by host commands that

- set GCN internal base-address and other configuration registers,
- specify the data domain on which the GCN GPU is to operate,
- invalidate and flush caches on the GCN GPU, and
- cause the GCN GPU to begin execution of a program.

The GCN driver program runs on the host.

The DPP array is the heart of the GCN processor. The array is organized as a set of compute unit pipelines, each independent from the others, that operate in parallel on streams of floating-point or integer data. The compute unit pipelines can process data or, through the memory controller, transfer data to, or from, memory. Computation in a compute unit pipeline can be made conditional. Outputs written to memory can also be made conditional.

When it receives a request, the compute unit pipeline loads instructions and data from memory, begins execution, and continues until the end of the kernel. As kernels are running, the GCN hardware automatically fetches instructions from memory into on-chip caches; GCN software plays no role in this. GCN kernels can load data from off-chip memory into on-chip general-purpose registers (GPRs) and caches.

The AMD GCN devices can detect floating-point exceptions and can generate interrupts. In particular, they detect IEEE floating-point exceptions in hardware; these can be recorded for post-execution analysis. The software interrupts shown in the previous figure from the command processor to the host represent hardware-generated interrupts for signaling command completion and related management functions.

The GCN processor hides memory latency by keeping track of potentially hundreds of work-items in different stages of execution, and by overlapping compute operations with memory-access operations.

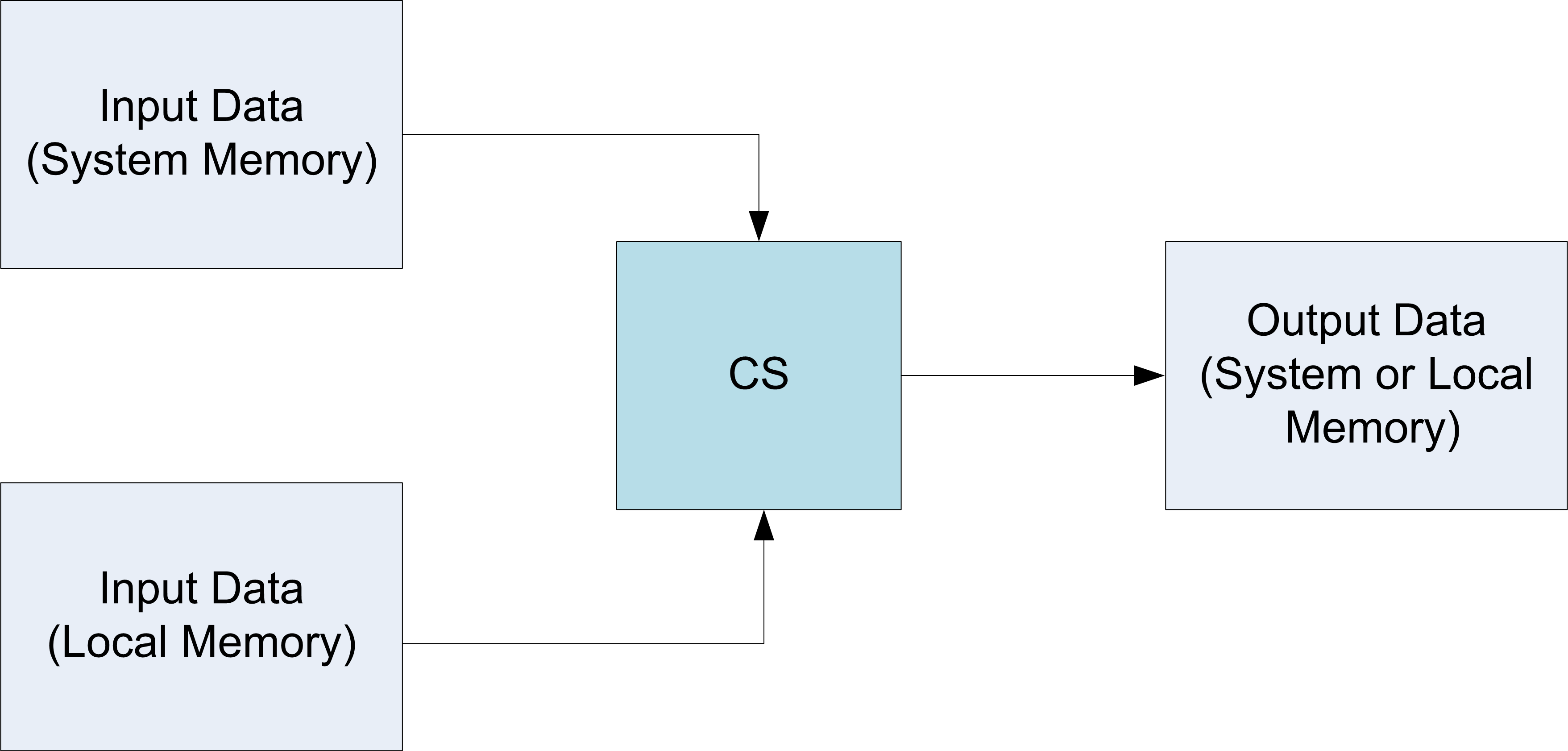

The figure below shows the dataflow for a GCN application. For general-purpose applications, only one processing block performs all computation.

GCN VEGA Generation Dataflow

Terminology

| Term | Description |
|---|---|
| GCN Processor | The Graphics Core Next shader processor is a scalar and vector ALU capable of running complex programs on behalf of a wavefront. |
| Dispatch | A dispatch launches a 1D, 2D, or 3D grid of work to the GCN processor array. |
| Workgroup | A workgroup is a collection of wavefronts that can synchronize with each other quickly; they can also share data through the Local Data Share. |
| Wavefront | A collection of 64 work-items that execute in parallel on a single GCN processor. |
| Work-item | A single element of work: one element from the dispatch grid, or in graphics a pixel or vertex. |
| Literal Constant | A 32-bit integer or float constant that is placed in the instruction stream. |
| Scalar ALU (SALU) | The scalar ALU operates on one value per wavefront and manages all control flow. |
| Vector ALU (VALU) | The vector ALU maintains Vector GPRs that are unique for each work-item and executes arithmetic operations uniquely on each work-item. |
| Microcode format | The microcode format describes the bit patterns used to encode instructions. Each instruction is either 32 or 64 bits. |
| Instruction | An instruction is the basic unit of the kernel. Instructions include: vector ALU, scalar ALU, memory transfer, and control flow operations. |
| Quad | A quad is a 2x2 group of screen-aligned pixels. This is relevant for sampling texture maps. |
| Texture Sampler | A texture sampler is a 128-bit entity that describes how the vector memory system reads and samples (filters) a texture map. |
| Texture Resource | A texture resource describes a block of memory: address, data format, stride, etc. |

Table: Basic Terms Used

Program Organization

GCN kernels are programs executed by the GCN processor. Conceptually, the kernel is executed independently on every work-item, but in reality the GCN processor groups 64 work-items into a wavefront, which executes the kernel on all 64 work-items in one pass.

The GCN processor consists of:

- A scalar ALU, which operates on one value per wavefront (common to all work-items).
- A vector ALU, which operates on unique values per work-item.
- Local data storage, which allows work-items within a workgroup to communicate and share data.
- Scalar memory, which can transfer data between SGPRs and memory through a cache.
- Vector memory, which can transfer data between VGPRs and memory, including sampling texture maps.

All kernel control flow is handled using scalar ALU instructions. This includes if/else, branches, and looping. Scalar ALU (SALU) and memory instructions work on an entire wavefront and operate on up to two SGPRs, as well as literal constants.

Vector memory and ALU instructions operate on all work-items in the wavefront at one time. To support branching and conditional execution, every wavefront has an EXECute mask that determines which work-items are active at that moment and which are dormant. Active work-items execute the vector instruction, and dormant ones treat the instruction as a NOP. The EXEC mask can be changed at any time by scalar ALU instructions.
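The EXEC-mask behavior described above can be modeled in Python as a simplified sketch. It assumes a 64-lane wavefront, represents a VGPR as a list of 64 per-lane values and EXEC as a 64-bit integer, and ignores timing: the hardware still issues the instruction even when EXEC is 0. The function `masked_vector_op` is hypothetical.

```python
WAVE_SIZE = 64  # work-items per wavefront


def masked_vector_op(exec_mask, dst, src0, src1, op):
    """Apply op lane by lane; lanes whose EXEC bit is 0 keep their old dst value."""
    out = list(dst)
    for lane in range(WAVE_SIZE):
        if (exec_mask >> lane) & 1:  # active work-item executes the instruction
            out[lane] = op(src0[lane], src1[lane])
        # dormant work-item: the instruction acts as a NOP for this lane
    return out


v0 = list(range(WAVE_SIZE))
v1 = [10] * WAVE_SIZE
v2 = [0] * WAVE_SIZE
exec_mask = 0x0000_0000_FFFF_FFFF  # only lanes 0-31 active
v2 = masked_vector_op(exec_mask, v2, v0, v1, lambda a, b: a + b)
print(v2[0], v2[31], v2[32])  # prints 10 41 0
```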

Vector ALU instructions can take up to three arguments, which can come from VGPRs, SGPRs, or literal constants that are part of the instruction stream. They operate on all work-items enabled by the EXEC mask. Vector compare and add-with-carry-out instructions return a bit-per-work-item mask to the SGPRs to indicate, per work-item, which had a “true” result from the compare or generated a carry-out.

Vector memory instructions transfer data between VGPRs and memory. Each work-item supplies its own memory address and supplies or receives unique data. These instructions are also subject to the EXEC mask.

Compute Shaders

Compute kernels (shaders) are generic programs that can run on the GCN processor, taking data from memory, processing it, and writing results back to memory. Compute kernels are created by a dispatch, which causes the GCN processors to run the kernel over all of the work-items in a 1D, 2D, or 3D grid of data. The GCN processor walks through this grid and generates wavefronts, which then run the compute kernel. Each work-item is initialized with its unique address (index) within the grid. Based on this index, the work-item computes the address of the data it is required to work on and what to do with the results.
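The grid-to-wavefront walk can be sketched in Python. For illustration only, the sketch assumes that work-items are linearized in row-major order (x fastest) and that each run of 64 consecutive work-items forms one wavefront. It does not model the hardware's actual walk order or workgroup boundaries. All function names are hypothetical.

```python
from itertools import product

WAVE_SIZE = 64


def flat_index(x, y, z, dim_x, dim_y):
    """Row-major linear index of work-item (x, y, z); an illustrative assumption."""
    return x + dim_x * (y + dim_y * z)


def wavefronts(dim_x, dim_y=1, dim_z=1):
    """Group the work-items of a 1D/2D/3D grid into wavefronts of up to 64 items."""
    # product() varies the last range fastest, so x is innermost (row-major).
    items = [(x, y, z) for z, y, x in product(range(dim_z), range(dim_y), range(dim_x))]
    return [items[i:i + WAVE_SIZE] for i in range(0, len(items), WAVE_SIZE)]


waves = wavefronts(16, 10)  # 160 work-items
print(len(waves), len(waves[-1]))  # prints 3 32
print(flat_index(*waves[1][0], 16, 10))  # prints 64
```

The last wavefront is only partially filled. In this model, its missing work-items would be the ones disabled in EXEC.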

Data Sharing¶

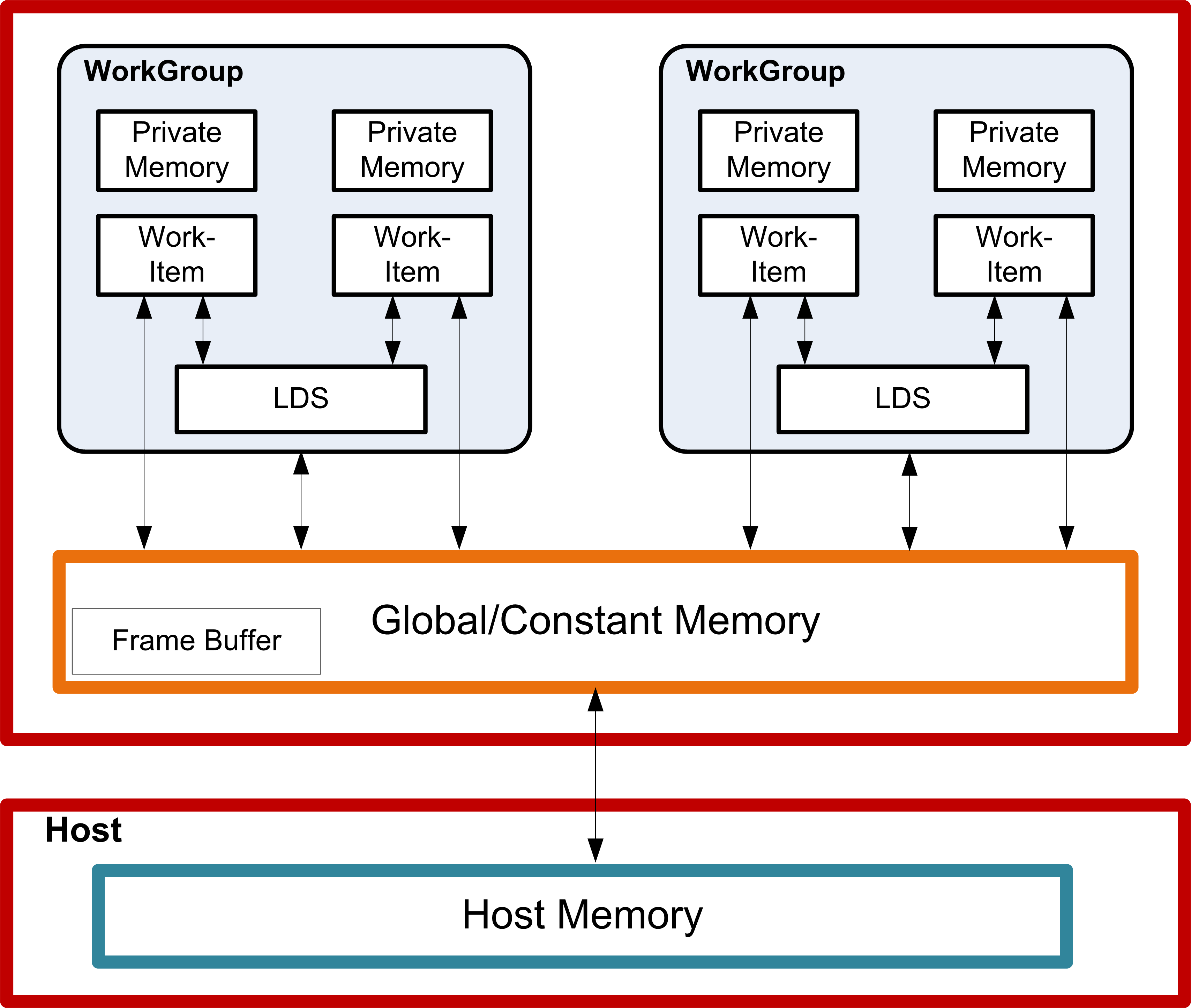

The AMD GCN stream processors can share data between different work-items. Data sharing can significantly boost performance. The figure below shows the memory hierarchy that is available to each work-item.

Shared Memory Hierarchy¶

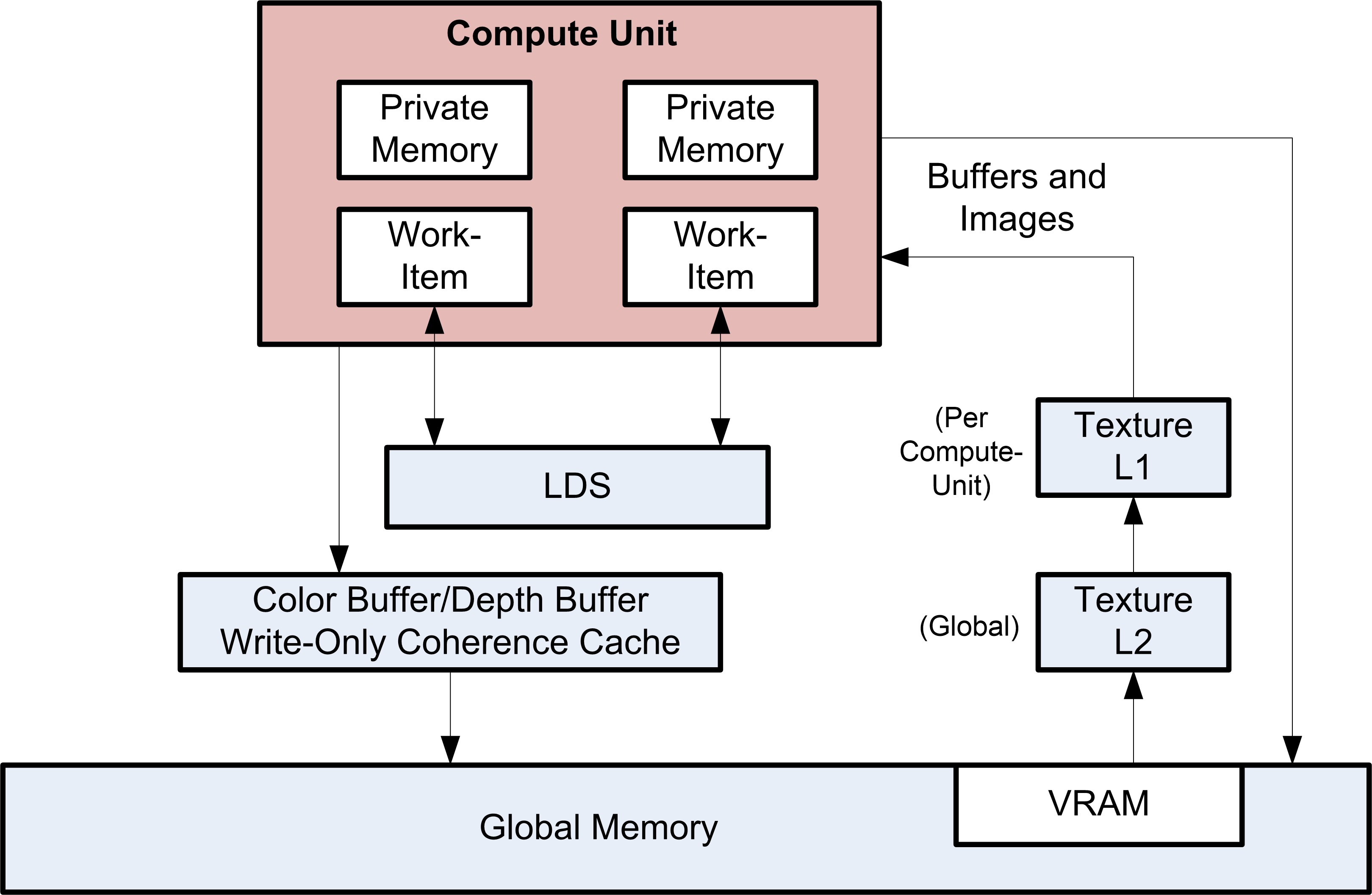

Device Memory

The AMD GCN devices offer several methods for access to off-chip memory from the processing elements (PE) within each compute unit. On the primary read path, the device consists of multiple channels of L2 read-only cache that provides data to an L1 cache for each compute unit. Special cache-less load instructions can force data to be retrieved from device memory during an execution of a load clause. Load requests that overlap within the clause are cached with respect to each other. The output cache is formed by two levels of cache. The first is a write-combining cache, which collects scatter and store operations and combines them to provide good access patterns to memory. The second is a read/write cache with atomic units that lets each processing element complete unordered atomic accesses that return the initial value. Each processing element provides the destination address on which the atomic operation acts, the data to be used in the atomic operation, and a return address for the read/write atomic unit to store the pre-op value in memory. Each store or atomic operation can be set up to return an acknowledgment to the requesting PE upon write confirmation of the return value (pre-atomic op value at destination) being stored to device memory.

This acknowledgment has two purposes:

- enabling a PE to recover the pre-op value from an atomic operation by performing a cache-less load from its return address after receipt of the write confirmation acknowledgment, and
- enabling the system to maintain a relaxed consistency model.

Each scatter write from a given PE to a given memory channel always maintains order. The acknowledgment enables one processing element to implement a fence to maintain serial consistency by ensuring all writes have been posted to memory prior to completing a subsequent write. In this manner, the system can maintain a relaxed consistency model between all parallel work-items operating on the system.

Kernel State

This chapter describes the kernel states visible to the shader program.

State Overview

The table below shows all of the hardware states readable or writable by a shader program.

| Abbrev. | Name | Size (bits) | Description |
|---|---|---|---|
| PC | Program Counter | 48 | Points to the memory address of the next shader instruction to execute. |
| V0-V255 | VGPR | 32 | Vector general-purpose register. |
| S0-S103 | SGPR | 32 | Scalar general-purpose register. |
| LDS | Local Data Share | 64 KB | Local data share is a scratch RAM with built-in arithmetic capabilities that allow data to be shared between threads in a workgroup. |
| EXEC | Execute Mask | 64 | A bit mask with one bit per thread, which is applied to vector instructions and controls which threads execute and which ignore the instruction. |
| EXECZ | EXEC is zero | 1 | A single-bit flag indicating that the EXEC mask is all zeros. |
| VCC | Vector Condition Code | 64 | A bit mask with one bit per thread; it holds the result of a vector compare operation. |
| VCCZ | VCC is zero | 1 | A single-bit flag indicating that the VCC mask is all zeros. |
| SCC | Scalar Condition Code | 1 | Result from a scalar ALU comparison instruction. |
| FLAT_SCRATCH | Flat scratch address | 64 | The base address of scratch memory. |
| XNACK_MASK | Address translation failure | 64 | Bit mask of threads that have failed their address translation. |
| STATUS | Status | 32 | Read-only shader status bits. |
| MODE | Mode | 32 | Writable shader mode bits. |
| M0 | Memory Reg | 32 | A temporary register that has various uses, including GPR indexing and bounds checking. |
| TRAPSTS | Trap Status | 32 | Holds information about exceptions and pending traps. |
| TBA | Trap Base Address | 64 | Holds the pointer to the current trap handler program. |
| TMA | Trap Memory Address | 64 | Temporary register for shader operations. For example, it can hold a pointer to memory used by the trap handler. |
| TTMP0-TTMP15 | Trap Temporary SGPRs | 32 | 16 SGPRs available only to the trap handler for temporary storage. |
| VMCNT | Vector memory instruction count | 6 | Counts the number of VMEM instructions issued but not yet completed. |
| EXPCNT | Export Count | 3 | Counts the number of Export and GDS instructions issued but not yet completed. Also counts VMEM writes that have not yet sent their write-data to the TC. |
| LGKMCNT | LDS, GDS, Constant and Message count | 4 | Counts the number of LDS, GDS, constant-fetch (scalar memory read), and message instructions issued but not yet completed. |

Table: Readable and Writable Hardware States

Program Counter (PC)

The program counter (PC) is a byte address pointing to the next instruction to execute. When a wavefront is created, the PC is initialized to the first instruction in the program.

The PC interacts with three instructions: S_GET_PC, S_SET_PC, and S_SWAP_PC. These transfer the PC to, and from, an even-aligned SGPR pair.

Branches jump to (PC_of_the_instruction_after_the_branch + offset). Other than through these instructions and branches, the shader program cannot read from, or write to, the PC. Branches, GET_PC, and SWAP_PC are PC-relative to the next instruction, not the current one. S_TRAP saves the PC of the S_TRAP instruction itself.
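This Python sketch shows the branch-target rule. It goes beyond what is stated above in two assumptions: the branch instruction is 4 bytes long, and its signed 16-bit immediate offset is counted in dwords (so the byte offset is 4 × SIMM16). Check the instruction reference for the exact encoding. Function names are hypothetical.

```python
def sign_extend16(value):
    """Interpret the low 16 bits of value as a signed two's-complement number."""
    value &= 0xFFFF
    return value - 0x10000 if value & 0x8000 else value


def branch_target(branch_pc, simm16, branch_size=4):
    """Target = address of the instruction after the branch + offset.

    Assumes a 4-byte branch encoding and a SIMM16 offset counted in dwords.
    """
    next_pc = branch_pc + branch_size
    return next_pc + 4 * sign_extend16(simm16)


print(hex(branch_target(0x1000, 0x0003)))  # prints 0x1010 (forward)
print(hex(branch_target(0x1000, 0xFFFF)))  # prints 0x1000 (branch to itself)
```

An offset of 0 therefore falls through to the next instruction.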

EXECute Mask

EXEC can be read from, and written to, through scalar instructions; it also can be written as a result of a vector-ALU compare. This mask affects vector-ALU, vector-memory, LDS, and export instructions. It does not affect scalar execution or branches.

A helper bit (EXECZ) can be used as a condition for branches to skip code when EXEC is zero.

Note

This GPU does no optimization when EXEC = 0. The shader hardware executes every instruction, wasting instruction issue bandwidth. Use CBRANCH or VSKIP to rapidly skip over code when it is likely that the EXEC mask is zero.

Status registers

Status register fields can be read, but not written to, by the shader. These bits are initialized at wavefront-creation time. The table below lists and briefly describes the status register fields.

| Field | Bit Position | Description |
|---|---|---|
| SCC | 0 | Scalar condition code. Used as a carry-out bit. For a comparison instruction, this bit indicates failure or success. For logical operations, this is 1 if the result was non-zero. |
| SPI_PRIO | 2:1 | Wavefront priority set by the shader processor interpolator (SPI) when the wavefront is created. See the S_SETPRIO instruction for details. 0 is lowest, 3 is highest priority. |
| WAVE_PRIO | 4:3 | Wavefront priority set by the shader program. See the S_SETPRIO instruction for details. |
| PRIV | 5 | Privileged mode. Can only be active when in the trap handler. Gives write access to the TTMP, TMA, and TBA registers. |
| TRAP_EN | 6 | Indicates that a trap handler is present. When set to zero, traps are not taken. |
| TTRACE_EN | 7 | Indicates whether thread trace is enabled for this wavefront. If zero, also ignore any shader-generated (instruction) thread-trace data. |
| EXPORT_RDY | 8 | Indicates whether export buffer space has been allocated. Before a Pixel or Vertex shader can export, the hardware checks this bit. If it is 1, the export can be issued. If it is 0, the shader stalls the export instruction and the wavefront sleeps until space becomes available in the export buffer; the bit is then set to 1 and the wavefront resumes. |
| EXECZ | 9 | Exec mask is zero. |
| VCCZ | 10 | Vector condition code is zero. |
| IN_TG | 11 | Wavefront is a member of a work-group of more than one wavefront. |
| IN_BARRIER | 12 | Wavefront is waiting at a barrier. |
| HALT | 13 | Wavefront is halted or scheduled to halt. HALT can be set by the host through wavefront-control messages, or by the shader. This bit is ignored while in the trap handler (PRIV = 1); it is also ignored if a host-initiated trap is received (request to enter the trap handler). |
| TRAP | 14 | Wavefront is flagged to enter the trap handler as soon as possible. |
| TTRACE_CU_EN | 15 | Enables/disables thread trace for this compute unit (CU). This bit allows more than one CU to be outputting USERDATA (shader-initiated writes to the thread-trace buffer). Note that wavefront data is only traced from one CU per shader array. Wavefront user data (instruction based) can be output if this bit is zero. |
| VALID | 16 | Wavefront is active (has been created and not yet ended). |
| ECC_ERR | 17 | An ECC error has occurred. |
| SKIP_EXPORT | 18 | For Vertex Shaders only. 1 = this shader is not allocated export buffer space; all export instructions are ignored (treated as NOPs). Formerly called VS_NO_ALLOC. Used for stream-out of multiple streams (multiple passes over the same VS), and for DS running in the VS stage for wavefronts that produced no primitives. |
| PERF_EN | 19 | Performance counters are enabled for this wavefront. |
| COND_DBG_USER | 20 | Conditional debug indicator for user mode. |
| COND_DBG_SYS | 21 | Conditional debug indicator for system mode. |
| ALLOW_REPLAY | 22 | Indicates that ATC replay is enabled. |
| MUST_EXPORT | 27 | This wavefront is required to perform an export with Done=1 before terminating. |

Table: Status Register Fields
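This Python sketch turns the table into a decoder for a 32-bit STATUS value that has already been read; how that value is obtained is outside the sketch. SCC is placed at bit 0 because SPI_PRIO occupies bits 2:1. `STATUS_FIELDS` and `decode_status` are hypothetical names.

```python
# (hi, lo) bit positions taken from the Status Register Fields table.
STATUS_FIELDS = {
    "SCC": (0, 0), "SPI_PRIO": (2, 1), "WAVE_PRIO": (4, 3), "PRIV": (5, 5),
    "TRAP_EN": (6, 6), "TTRACE_EN": (7, 7), "EXPORT_RDY": (8, 8),
    "EXECZ": (9, 9), "VCCZ": (10, 10), "IN_TG": (11, 11),
    "IN_BARRIER": (12, 12), "HALT": (13, 13), "TRAP": (14, 14),
    "TTRACE_CU_EN": (15, 15), "VALID": (16, 16), "ECC_ERR": (17, 17),
    "SKIP_EXPORT": (18, 18), "PERF_EN": (19, 19), "COND_DBG_USER": (20, 20),
    "COND_DBG_SYS": (21, 21), "ALLOW_REPLAY": (22, 22), "MUST_EXPORT": (27, 27),
}


def decode_status(status):
    """Split a 32-bit STATUS value into its named fields."""
    return {
        name: (status >> lo) & ((1 << (hi - lo + 1)) - 1)
        for name, (hi, lo) in STATUS_FIELDS.items()
    }


fields = decode_status((1 << 16) | (3 << 1) | (1 << 6))
print(fields["VALID"], fields["SPI_PRIO"], fields["TRAP_EN"], fields["PRIV"])  # prints 1 3 1 0
```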

Mode register

Mode register fields can be read from, and written to, by the shader through scalar instructions. The table below lists and briefly describes the mode register fields.

| Field | Bit Position | Description |
|---|---|---|
| FP_ROUND | 3:0 | [1:0] Single-precision round mode. [3:2] Double-precision round mode. Round modes: 0 = nearest even, 1 = +infinity, 2 = -infinity, 3 = toward zero. |
| FP_DENORM | 7:4 | [1:0] Single denormal mode. [3:2] Double denormal mode. Denorm modes: 0 = flush input and output denorms; 1 = allow input denorms, flush output denorms; 2 = flush input denorms, allow output denorms; 3 = allow input and output denorms. |
| DX10_CLAMP | 8 | Used by the vector ALU to force DX10-style treatment of NaNs: when set, clamp NaN to zero; otherwise, pass NaN through. |
| IEEE | 9 | Floating-point opcodes that support exception-flag gathering quiet and propagate signaling NaN inputs per IEEE 754-2008. Min_dx10 and max_dx10 become IEEE 754-2008 compliant due to signaling NaN propagation and quieting. |
| LOD_CLAMPED | 10 | Sticky bit indicating that one or more texture accesses had their LOD clamped. |
| DEBUG | 11 | Forces the wavefront to jump to the exception handler after each instruction is executed (but not after ENDPGM). Only works if TRAP_EN = 1. |
| EXCP_EN | 20:12 | Enable mask for exceptions. Enabled means that if the exception occurs and TRAP_EN == 1, a trap is taken. [12]: invalid. [13]: inputDenormal. [14]: float_div0. [15]: overflow. [16]: underflow. [17]: inexact. [18]: int_div0. [19]: address watch. [20]: memory violation. |
| FP16_OVFL | 23 | If set, an overflowed FP16 result is clamped to +/- MAX_FP16, regardless of round mode, while still preserving true INF values. |
| POPS_PACKER0 | 24 | 1 = this wave is associated with packer 0. User shader must set this to !PackerID from the POPS initialized SGPR (load_collision_waveID), or zero if not using POPS. |
| POPS_PACKER1 | 25 | 1 = this wave is associated with packer 1. User shader must set this to PackerID from the POPS initialized SGPR (load_collision_waveID), or zero if not using POPS. |
| DISABLE_PERF | 26 | 1 = disable performance counting for this wave. |
| GPR_IDX_EN | 27 | GPR index enable. |
| VSKIP | 28 | 0 = normal operation. 1 = skip (do not execute) any vector instructions: VALU, VMEM, export, LDS, GDS. Skipping instructions occurs at high speed (10 wavefronts per clock cycle can skip one instruction), which is much faster than issuing and discarding instructions. |
| CSP | 31:29 | Conditional branch stack pointer. |

Table: Mode Register Fields
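The FP_ROUND and FP_DENORM encodings are easy to misread. This minimal Python sketch encodes and decodes the low byte of MODE. It covers only bits 7:0 and does not show how the value is written to MODE. The function and table names are hypothetical.

```python
ROUND_MODES = {0: "nearest even", 1: "+infinity", 2: "-infinity", 3: "toward zero"}
DENORM_MODES = {
    0: "flush input and output denorms",
    1: "allow input denorms, flush output denorms",
    2: "flush input denorms, allow output denorms",
    3: "allow input and output denorms",
}


def decode_fp_mode(mode):
    """Decode FP_ROUND (MODE[3:0]) and FP_DENORM (MODE[7:4]) from a MODE value."""
    fp_round = mode & 0xF
    fp_denorm = (mode >> 4) & 0xF
    return {
        "single_round": ROUND_MODES[fp_round & 0x3],
        "double_round": ROUND_MODES[(fp_round >> 2) & 0x3],
        "single_denorm": DENORM_MODES[fp_denorm & 0x3],
        "double_denorm": DENORM_MODES[(fp_denorm >> 2) & 0x3],
    }


def encode_fp_mode(single_round, double_round, single_denorm, double_denorm):
    """Build MODE[7:0] from the four 2-bit selectors."""
    return (double_denorm << 6) | (single_denorm << 4) | (double_round << 2) | single_round


mode = encode_fp_mode(0, 3, 0, 3)
print(hex(mode), decode_fp_mode(mode)["double_round"])  # prints 0xcc toward zero
```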

GPRs and LDS

This section describes how GPR and LDS space is allocated to a wavefront, as well as how out-of-range and misaligned accesses are handled.

Out-of-Range behavior

This section defines the behavior when a source or destination GPR or memory address is outside the legal range for a wavefront.

Out-of-range can occur through GPR-indexing or bad programming. It is illegal to index from one register type into another (for example: SGPRs into trap registers or inline constants). It is also illegal to index within inline constants.

The following describes the out-of-range behavior for various storage types.

- SGPRs
  - Source or destination out-of-range = (sgpr < 0 || (sgpr >= sgpr_size)).
  - Source out-of-range: returns the value of SGPR0 (not the value 0).
  - Destination out-of-range: instruction writes no SGPR result.
- VGPRs
  - Similar to SGPRs. It is illegal to index from SGPRs into VGPRs, or vice versa.
  - Out-of-range = (vgpr < 0 || (vgpr >= vgpr_size)).
  - If a source VGPR is out of range, VGPR0 is used.
  - If a destination VGPR is out of range, the instruction is ignored (treated as a NOP).
- LDS
  - If the LDS address is out of range (addr < 0 or addr > MIN(lds_size, M0)):
    - Writes out-of-range are discarded; it is undefined if SIZE is not a multiple of write-data-size.
    - Reads return the value zero.
  - If any source VGPR is out of range, the VGPR0 value is used.
  - If the destination VGPR is out of range, the instruction is nullified (issued with EXEC = 0).
- Memory, LDS, and GDS: reads and atomics with returns.
  - If any source VGPR or SGPR is out of range, the data value is undefined.
  - If any destination VGPR is out of range, the operation is nullified by issuing the instruction as if the EXEC mask were cleared to 0.
    - This out-of-range check must check all VGPRs that can be returned (for example: VDST to VDST+3 for a BUFFER_LOAD_DWORDx4).
    - This check must also include the extra PRT (partially resident texture) VGPR and nullify the fetch if this VGPR is out of range, no matter whether the texture system actually returns this value or not.
  - Atomic operations with out-of-range destination VGPRs are nullified: issued, but with an EXEC mask of zero.
- Instructions with multiple destinations (for example: V_ADDC): if any destination is out of range, no results are written.
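This toy Python model covers the SGPR/VGPR rules above: reads from an out-of-range index return register 0, out-of-range writes are dropped, and a multi-destination write is all-or-nothing. It is a per-register sketch that ignores lanes, EXEC, and the LDS and memory cases. `RegisterFile` and `multi_dest_write` are hypothetical names.

```python
class RegisterFile:
    """Toy SGPR or VGPR file modeling the out-of-range rules."""

    def __init__(self, size):
        self.regs = [0] * size

    def in_range(self, index):
        return 0 <= index < len(self.regs)

    def read(self, index):
        # Out-of-range source: the value of register 0 is returned, not the value 0.
        return self.regs[index] if self.in_range(index) else self.regs[0]

    def write(self, index, value):
        # Out-of-range destination: nothing is written.
        if self.in_range(index):
            self.regs[index] = value


def multi_dest_write(rf, dests, values):
    """Write none of the destinations if any of them is out of range."""
    if all(rf.in_range(d) for d in dests):
        for d, v in zip(dests, values):
            rf.write(d, v)


sgprs = RegisterFile(16)
sgprs.write(0, 0xABCD)
print(hex(sgprs.read(40)))  # prints 0xabcd (SGPR0's value), not 0x0
sgprs.write(40, 7)          # silently dropped
multi_dest_write(sgprs, [14, 16], [1, 2])
print(sgprs.regs[14])       # prints 0: index 16 is out of range, so nothing was written
```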

SGPR Allocation and storage

A wavefront can be allocated 16 to 102 SGPRs, in units of 16 GPRs (Dwords). These are logically viewed as SGPRs 0-101. The VCC is physically stored as part of the wavefront’s SGPRs in the highest-numbered two SGPRs (SGPR 106 and 107; the source/destination VCC is an alias for those two SGPRs). When a trap handler is present, 16 additional SGPRs are reserved after VCC to hold the trap addresses, as well as saved-PC and trap-handler temps. These are all privileged (they cannot be written to unless privilege is set). Note that if a wavefront allocates 16 SGPRs, 2 SGPRs are normally used as VCC, leaving the remaining 14 available to the shader. Shader hardware does not prevent use of all 16 SGPRs.

SGPR Alignment

Even-aligned SGPRs are required in the following cases:

- When 64-bit data is used. This is required for moves to/from 64-bit registers, including the PC.
- When a scalar memory read takes its address base from an SGPR pair.

Quad-alignment is required for the data-GPR when a scalar memory read returns four or more Dwords. When a 64-bit quantity is stored in SGPRs, the LSBs are in SGPR[n], and the MSBs are in SGPR[n+1].
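This small Python sketch checks the alignment rules and shows the 64-bit register layout. `check_sgpr_operand` and `split_u64` are hypothetical helpers. `dwords` is the operand size in SGPRs, and the quad-alignment branch is meant for scalar memory read results of four or more dwords.

```python
def check_sgpr_operand(first_sgpr, dwords):
    """Return True if an SGPR operand starting at first_sgpr is legally aligned.

    64-bit operands need an even base; scalar-memory results of four or more
    dwords need a quad-aligned base (a multiple of 4).
    """
    if dwords >= 4:
        return first_sgpr % 4 == 0
    if dwords == 2:
        return first_sgpr % 2 == 0
    return True  # single SGPRs have no alignment requirement


def split_u64(value):
    """Return (SGPR[n], SGPR[n+1]) for a 64-bit value: LSBs first, MSBs second."""
    return value & 0xFFFFFFFF, (value >> 32) & 0xFFFFFFFF


print(check_sgpr_operand(4, 2), check_sgpr_operand(5, 2), check_sgpr_operand(6, 4))
# prints True False False
lo, hi = split_u64(0x0000_7FFF_1234_5678)
print(hex(lo), hex(hi))  # prints 0x12345678 0x7fff
```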

VGPR Allocation and Alignment

VGPRs are allocated in groups of four Dwords. Operations using pairs of VGPRs (for example: double-floats) have no alignment restrictions. Physically, allocations of VGPRs can wrap around the VGPR memory pool.

LDS Allocation and Clamping

LDS is allocated per work-group or per-wavefront when work-groups are not in use. LDS space is allocated to a work-group or wavefront in contiguous blocks of 128 Dwords on 128-Dword alignment. LDS allocations do not wrap around the LDS storage. All accesses to LDS are restricted to the space allocated to that wavefront/work-group.

Clamping of LDS reads and writes is controlled by two size registers, which contain values for the size of the LDS space allocated by SPI to this wavefront or work-group, and a possibly smaller value specified in the LDS instruction (size is held in M0). The LDS operations use the smaller of these two sizes to determine how to clamp the read/write addresses.
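This minimal sketch covers LDS allocation rounding and clamping. It assumes 4-byte dwords and that the size held in M0 is expressed in bytes, like the other LDS fields in M0. Function names are hypothetical.

```python
LDS_BLOCK_DWORDS = 128  # allocation granularity and alignment


def lds_alloc_dwords(requested_dwords):
    """Round an LDS request up to whole 128-dword blocks."""
    blocks = -(-requested_dwords // LDS_BLOCK_DWORDS)  # ceiling division
    return blocks * LDS_BLOCK_DWORDS


def effective_lds_limit_bytes(allocated_dwords, m0_size_bytes):
    """Clamping uses the smaller of the SPI allocation and the size held in M0."""
    return min(allocated_dwords * 4, m0_size_bytes)


alloc = lds_alloc_dwords(300)
print(alloc, effective_lds_limit_bytes(alloc, 0xFFFF_FFFF), effective_lds_limit_bytes(alloc, 1024))
# prints 384 1536 1024
```

Setting M0's size to its maximum leaves the SPI allocation as the only limit.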

M# Memory Descriptor

There is one 32-bit M# (M0) register per wavefront, which can be used for:

- Local Data Share (LDS)
  - Interpolation: holds { 1'b0, new_prim_mask[15:1], parameter_offset[15:0] } // in bytes
  - LDS direct-read offset and data type: { 13'b0, DataType[2:0], LDS_address[15:0] } // addr in bytes
  - LDS addressing for Memory/Vfetch → LDS: {16'h0, lds_offset[15:0]} // in bytes
- Global Data Share (GDS)
  - { base[15:0], size[15:0] } // base and size are in bytes
- Indirect GPR addressing for both vector and scalar instructions. M0 is an unsigned index.
- Send-message value. EMIT/CUT use M0 and EXEC as the send-message data.
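The brace notation above is Verilog-style concatenation: the first field lands in the most significant bits. This Python sketch packs M0 for three of these uses. Helper names are hypothetical, and the DataType value in the example is arbitrary because its encodings are not listed here.

```python
def m0_gds(base, size):
    """{ base[15:0], size[15:0] }: base in the upper half, size in the lower half."""
    return ((base & 0xFFFF) << 16) | (size & 0xFFFF)


def m0_lds_direct(data_type, lds_address):
    """{ 13'b0, DataType[2:0], LDS_address[15:0] }."""
    return ((data_type & 0x7) << 16) | (lds_address & 0xFFFF)


def m0_interp(new_prim_mask, parameter_offset):
    """{ 1'b0, new_prim_mask[15:1], parameter_offset[15:0] }: bit 0 of the mask is dropped."""
    return (((new_prim_mask >> 1) & 0x7FFF) << 16) | (parameter_offset & 0xFFFF)


print(hex(m0_gds(0x100, 0x40)))     # prints 0x1000040
print(hex(m0_lds_direct(2, 0x80)))  # prints 0x20080
```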

SCC: Scalar Condition code

Most scalar ALU instructions set the Scalar Condition Code (SCC) bit, indicating the result of the operation.

The SCC can be used as the carry-in for extended-precision integer arithmetic, as well as the selector for conditional moves and branches.
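This Python model shows SCC acting as a carry-in: a 64-bit add is built from two 32-bit scalar adds. It is loosely based on the ISA's S_ADD_U32 / S_ADDC_U32 pair (add, then add-with-carry). The Python function names are hypothetical, and only the carry behavior is modeled.

```python
MASK32 = 0xFFFF_FFFF


def s_add_u32(a, b):
    """Return (result, scc): 32-bit sum and its carry-out."""
    total = a + b
    return total & MASK32, int(total > MASK32)


def s_addc_u32(a, b, scc):
    """Add with SCC as carry-in; SCC is updated with the new carry-out."""
    total = a + b + scc
    return total & MASK32, int(total > MASK32)


def add_u64(x, y):
    """64-bit add built from two 32-bit scalar adds chained through SCC."""
    lo, scc = s_add_u32(x & MASK32, y & MASK32)
    hi, scc = s_addc_u32(x >> 32, y >> 32, scc)
    return (hi << 32) | lo, scc


print(hex(add_u64(0x0000_0001_FFFF_FFFF, 1)[0]))  # prints 0x200000000
```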

Vector Compares: VCC and VCCZ

Vector ALU comparisons always set the Vector Condition Code (VCC) register (1=pass, 0=fail). Also, vector compares have the option of setting EXEC to the VCC value.

There is also a VCC summary bit (vccz) that is set to 1 when the VCC result is zero. This is useful for early-exit branch tests. VCC is also set for selected integer ALU operations (carry-out).

Vector compares have the option of writing the result to VCC (32-bit instruction encoding) or to any SGPR (64-bit instruction encoding). VCCZ is updated every time VCC is updated: vector compares and scalar writes to VCC.

The EXEC mask determines which threads execute an instruction. The VCC indicates which executing threads passed the conditional test, or which threads generated a carry-out from an integer add or subtract.

V_CMP_* ⇒ VCC[n] = EXEC[n] & (test passed for thread[n])

VCC is always fully written; there are no partial mask updates.
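This Python sketch models the compare rule `VCC[n] = EXEC[n] & test` and the VCCZ summary bit. It assumes a 64-lane wavefront and represents VCC as a 64-bit integer. `v_cmp` is a hypothetical helper, not an instruction encoding.

```python
WAVE_SIZE = 64


def v_cmp(exec_mask, src0, src1, test):
    """VCC[n] = EXEC[n] & test(src0[n], src1[n]); every bit of VCC is written."""
    vcc = 0
    for lane in range(WAVE_SIZE):
        if (exec_mask >> lane) & 1 and test(src0[lane], src1[lane]):
            vcc |= 1 << lane
    vccz = int(vcc == 0)  # summary bit, set when the whole mask is zero
    return vcc, vccz


v0 = list(range(WAVE_SIZE))
v1 = [32] * WAVE_SIZE
vcc, vccz = v_cmp(0xFFFF_FFFF_FFFF_FFFF, v0, v1, lambda a, b: a < b)
print(hex(vcc), vccz)  # prints 0xffffffff 0
vcc, vccz = v_cmp(0xFFFF_FFFF_0000_0000, v0, v1, lambda a, b: a < b)
print(hex(vcc), vccz)  # prints 0x0 1
```

In the second call, the lanes that would pass (0-31) are disabled in EXEC, so VCC is all zeros and VCCZ is set.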

Note

VCC physically resides in the highest two user SGPRs of the SGPR register file, so when an instruction sources VCC, that counts against the limit on the total number of SGPRs that can be sourced for a given instruction.

Shader Hazard with VCC: The user/compiler must prevent a scalar-ALU write to the SGPR holding VCC from being immediately followed by a conditional branch using VCCZ. The hardware cannot detect this case and does not insert the one required wait state. (Hardware does detect the hazard when the SALU instruction writes to VCC; it fails to detect it only when the SALU instruction references the SGPRs that happen to hold VCC.)

Trap and Exception registers

Each type of exception can be enabled or disabled independently by setting, or clearing, bits in the MODE register’s EXCP_EN field. This section describes the registers that control and report kernel exceptions.

All trap temporary SGPRs (TTMP*) are privileged for writes: they can be written only when in the trap handler (STATUS.PRIV = 1). When not privileged, writes to these are ignored. TMA and TBA are read-only; they can be accessed through S_GETREG_B32.

When a trap is taken (user-initiated, exception, or host-initiated), the shader hardware generates an S_TRAP instruction. This loads trap information into a pair of SGPRs:

{TTMP1, TTMP0} = {3'h0, pc_rewind[3:0], HT[0], trapID[7:0], PC[47:0]}.

HT is set to one for host-initiated traps, and zero for user traps (S_TRAP) or exceptions. trapID is zero for exceptions, or the user/host trap ID for those traps. When the trap handler is entered, the PC of the faulting instruction is (PC - pc_rewind*4).
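A trap handler, or a debugger reading the saved TTMP pair, can unpack the fields as shown in this Python sketch. It follows the bit layout given above: PC in bits 47:0, trapID in 55:48, HT in 56, and pc_rewind in 60:57. The function names are hypothetical.

```python
def decode_trap_info(ttmp0, ttmp1):
    """Unpack {TTMP1, TTMP0} = {3'h0, pc_rewind[3:0], HT, trapID[7:0], PC[47:0]}."""
    packed = ((ttmp1 & 0xFFFF_FFFF) << 32) | (ttmp0 & 0xFFFF_FFFF)
    pc = packed & ((1 << 48) - 1)     # bits 47:0
    trap_id = (packed >> 48) & 0xFF   # bits 55:48
    host_trap = (packed >> 56) & 0x1  # bit 56
    pc_rewind = (packed >> 57) & 0xF  # bits 60:57
    return {
        "pc": pc,
        "trap_id": trap_id,
        "host_initiated": bool(host_trap),
        "pc_rewind": pc_rewind,
        "faulting_pc": pc - pc_rewind * 4,
    }


def encode_trap_info(pc, trap_id, host_trap, pc_rewind):
    """Inverse of decode_trap_info; returns (ttmp0, ttmp1)."""
    packed = ((pc_rewind & 0xF) << 57) | ((host_trap & 0x1) << 56) \
        | ((trap_id & 0xFF) << 48) | (pc & ((1 << 48) - 1))
    return packed & 0xFFFF_FFFF, packed >> 32


ttmp0, ttmp1 = encode_trap_info(pc=0x7F00_0000_1010, trap_id=0, host_trap=0, pc_rewind=1)
info = decode_trap_info(ttmp0, ttmp1)
print(hex(info["faulting_pc"]))  # prints 0x7f000000100c
```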

STATUS . TRAP_EN - This bit indicates to the shader whether or not a trap handler is present. When one is not present, traps are not taken, no matter whether they’re floating point, user-, or host-initiated traps. When the trap handler is present, the wavefront uses an extra 16 SGPRs for trap processing. If trap_en == 0, all traps and exceptions are ignored, and s_trap is converted by hardware to NOP.

MODE.EXCP_EN[8:0] - Floating-point exception enables. Defines which exceptions and events cause a trap.

| Bit | Exception |
|---|---|
| 0 | Invalid |
| 1 | Input Denormal |
| 2 | Divide by zero |
| 3 | Overflow |
| 4 | Underflow |
| 5 | Inexact |
| 6 | Integer divide by zero |
| 7 | Address Watch - TC (L1) has witnessed a thread access to an ‘address of interest’ |
| 8 | Memory violation |

Trap Status register¶

The trap status register records previously seen traps or exceptions. It can be read and written by the kernel.

| Field | Bits | Description |
|---|---|---|
| EXCP | 8:0 | Status bits of which exceptions have occurred. These bits are sticky and accumulate results until the shader program clears them. They are accumulated regardless of the setting of EXCP_EN, and can be read or written without shader privilege. Bit/exception: 0 invalid, 1 input denormal, 2 divide by zero, 3 overflow, 4 underflow, 5 inexact, 6 integer divide by zero, 7 address watch, 8 memory violation. |
| SAVECTX | 10 | A bit set by the host command indicating that this wave must jump to its trap handler and save its context. This bit must be cleared by the trap handler using S_SETREG. Note: a shader can set this bit to 1 to cause a save-context trap; due to hardware latency, the shader may execute up to 2 additional instructions before taking the trap. |
| ILLEGAL_INST | 11 | An illegal instruction has been detected. |
| ADDR_WATCH1-3 | 14:12 | Indicates that address watch 1, 2, or 3 has been hit. Bit 12 is address watch 1; bit 13 is 2; bit 14 is 3. |
| EXCP_CYCLE | 21:16 | When a float exception occurs, this tells the trap handler on which cycle the exception occurred: 0-3 for normal float operations, 0-7 for double float add, and 0-15 for double float muladd or transcendentals. This field records the cycle number of the first occurrence of an enabled (unmasked) exception. EXCP_CYCLE[1:0]: phase (threads 0-15 are in phase 0, 48-63 in phase 3). EXCP_CYCLE[3:2]: multi-slot pass. EXCP_CYCLE[5:4]: hybrid pass, used for machines running at lower rates. |
| DP_RATE | 31:29 | Determines how the shader interprets TRAPSTS.EXCP_CYCLE. Different Vector Shader Processors (VSP) process instructions at different rates. |

Table : Exception Field Bits
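A trap handler typically has to turn a raw TRAPSTS value into the fields above. The sketch below does that in Python using only the bit positions from the table; the function and dictionary key names are hypothetical, and obtaining the raw value (for example with S_GETREG_B32) is outside its scope.

```python
# Exception status bits of TRAPSTS.EXCP[8:0], in bit order.
EXCP_NAMES = [
    "invalid", "input_denormal", "divide_by_zero", "overflow", "underflow",
    "inexact", "int_divide_by_zero", "address_watch", "memory_violation",
]


def field(value: int, hi: int, lo: int) -> int:
    """Extract bits hi:lo (inclusive) of value."""
    return (value >> lo) & ((1 << (hi - lo + 1)) - 1)


def decode_trapsts(trapsts: int) -> dict:
    excp = field(trapsts, 8, 0)
    cycle = field(trapsts, 21, 16)
    return {
        "exceptions": [n for bit, n in enumerate(EXCP_NAMES) if (excp >> bit) & 1],
        "savectx": bool(field(trapsts, 10, 10)),
        "illegal_inst": bool(field(trapsts, 11, 11)),
        # Bit 12 is address watch 1, bit 13 is 2, bit 14 is 3.
        "addr_watch": [n for n in (1, 2, 3) if field(trapsts, 11 + n, 11 + n)],
        "excp_cycle_phase": field(cycle, 1, 0),   # 0: threads 0-15 ... 3: threads 48-63
        "excp_cycle_pass": field(cycle, 3, 2),    # multi-slot pass
        "excp_cycle_hybrid": field(cycle, 5, 4),  # hybrid pass
        "dp_rate": field(trapsts, 31, 29),
    }


status = decode_trapsts((1 << 2) | (1 << 8) | (1 << 13) | (3 << 16))
print(status["exceptions"], status["addr_watch"], status["excp_cycle_phase"])
```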

Memory Violations¶

A Memory Violation is reported from:

LDS alignment error.

Memory read/write/atomic alignment error.

Flat access where the address is invalid (does not fall in any aperture).

Write to a read-only surface.

GDS alignment or address range error.

GWS operation aborted (semaphore or barrier not executed).

Memory violations are not reported for instruction or scalar-data accesses.

Memory Buffer to LDS does NOT return a memory violation if the LDS address is out of range, but masks off EXEC bits of threads that would go out of range.

When a memory access is in violation, the appropriate memory (LDS or TC) returns MEM_VIOL to the wave. This is stored in the wave’s TRAPSTS.mem_viol bit. This bit is sticky, so once set to 1, it remains at 1 until the user clears it.

There is a corresponding exception enable bit (EXCP_EN.mem_viol). If this bit is set when the memory returns with a violation, the wave jumps to the trap handler.

Memory violations are not precise. The violation is reported when the LDS or TC processes the address; during this time, the wave may have processed many more instructions. When a mem_viol is reported, the Program Counter saved is that of the next instruction to execute; it has no relationship to the faulting instruction.

Program Flow Control¶

All program flow control is programmed using scalar ALU instructions. This includes loops, branches, subroutine calls, and traps. The program uses SGPRs to store branch conditions and loop counters. Constants can be fetched from the scalar constant cache directly into SGPRs.

Program Control¶

The instructions in the table below control the priority and termination of a shader program, as well as provide support for trap handlers.

| Instructions | Description |
|---|---|
| S_ENDPGM | Terminates the wavefront. It can appear anywhere in the kernel and can appear multiple times. |
| S_ENDPGM_SAVED | Terminates the wavefront due to context save. It can appear anywhere in the kernel and can appear multiple times. |
| S_NOP | Does nothing; it can be repeated in hardware up to eight times. |
| S_TRAP | Jumps to the trap handler. |
| S_RFE | Returns from the trap handler. |
| S_SETPRIO | Modifies the priority of this wavefront: 0 = lowest, 3 = highest. |
| S_SLEEP | Causes the wavefront to sleep for 64 to 960 clock cycles. |
| S_SENDMSG | Sends a message (typically an interrupt) to the host CPU. |

Table : Control Instructions

Branching¶

Branching is done using one of the following scalar ALU instructions.

| Instructions | Description |
|---|---|
| S_BRANCH | Unconditional branch. |
| S_CBRANCH_<test> | Conditional branch. Branch only if <test> is true. Tests are VCCZ, VCCNZ, EXECZ, EXECNZ, SCCZ, and SCCNZ. |
| S_CBRANCH_CDBGSYS | Conditional branch, taken if the COND_DBG_SYS status bit is set. |
| S_CBRANCH_CDBGUSER | Conditional branch, taken if the COND_DBG_USER status bit is set. |
| S_CBRANCH_CDBGSYS_AND_USER | Conditional branch, taken only if both COND_DBG_SYS and COND_DBG_USER are set. |
| S_SETPC | Directly set the PC from an SGPR pair. |
| S_SWAPPC | Swap the current PC with an address in an SGPR pair. |
| S_GETPC | Retrieve the current PC value (does not cause a branch). |
| S_CBRANCH_FORK and S_CBRANCH_JOIN | Conditional branch for complex branching. |
| S_SETVSKIP | Set a bit that causes all vector instructions to be ignored. Useful alternative to branching. |
| S_CALL_B64 | Jump to a subroutine, and save the return address. SGPR_pair = PC+4; PC = PC+4+SIMM16*4. |

Table : Branch Instructions
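The S_CALL_B64 formula can be checked with a little arithmetic. The Python sketch below assumes, as the PC+4 return address implies, that PC is the address of the S_CALL_B64 itself, and that SIMM16 is sign-extended (it is a signed immediate). `call_simm16` is a hypothetical assembler-side helper that inverts the formula.

```python
def sext16(x: int) -> int:
    """Sign-extend a 16-bit immediate."""
    x &= 0xFFFF
    return x - 0x10000 if x & 0x8000 else x


def s_call_b64(pc: int, simm16: int) -> tuple:
    """Model S_CALL_B64: returns (value saved in the SGPR pair, new PC)."""
    return_addr = pc + 4
    target = pc + 4 + sext16(simm16) * 4
    return return_addr, target


def call_simm16(pc: int, target: int) -> int:
    """Hypothetical helper: the SIMM16 that makes S_CALL_B64 at `pc` reach `target`."""
    delta = target - (pc + 4)
    if delta % 4 != 0:
        raise ValueError("target must be dword aligned relative to PC+4")
    words = delta // 4
    if not -0x8000 <= words <= 0x7FFF:
        raise ValueError("target out of SIMM16 range")
    return words & 0xFFFF


# A backward call: the negative offset is encoded in two's complement.
ret, new_pc = s_call_b64(pc=0x100, simm16=call_simm16(0x100, 0x80))
print(hex(ret), hex(new_pc))
```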

For conditional branches, the branch condition can be determined by either scalar or vector operations. A scalar compare operation sets the Scalar Condition Code (SCC), which then can be used as a conditional branch condition. Vector compare operations set the VCC mask, and VCCZ or VCCNZ then can be used to determine branching.

Workgroups¶

Work-groups are collections of wavefronts running on the same compute unit which can synchronize and share data. Up to 16 wavefronts (1024 work-items) can be combined into a work-group. When multiple wavefronts are in a workgroup, the S_BARRIER instruction can be used to force each wavefront to wait until all other wavefronts reach the same instruction; then, all wavefronts continue. Any wavefront can terminate early using S_ENDPGM, and the barrier is considered satisfied when the remaining live waves reach their barrier instruction.

Data Dependency Resolution¶

Shader hardware resolves most data dependencies, but a few cases must be explicitly handled by the shader program. In these cases, the program must insert S_WAITCNT instructions to ensure that previous operations have completed before continuing.

The shader has three counters that track the progress of issued instructions. S_WAITCNT waits for the values of these counters to be at, or below, specified values before continuing.

These allow the shader writer to schedule long-latency instructions, execute unrelated work, and specify when results of long-latency operations are needed.

Instructions of a given type return in order, but instructions of different types can complete out-of-order. For example, both GDS and LDS instructions use LGKM_cnt, but they can return out-of-order.

- VM_CNT: Vector memory count. Determines when memory reads have returned data to VGPRs, or memory writes have completed.
  - Incremented every time a vector-memory read or write (MIMG, MUBUF, or MTBUF format) instruction is issued.
  - Decremented for reads when the data has been written back to the VGPRs, and for writes when the data has been written to the L2 cache.
  - Ordering: memory reads and writes return in the order they were issued, including mixing reads and writes.
- LGKM_CNT: (LDS, GDS, (K)constant, (M)essage) Determines when one of these low-latency instructions has completed.
  - Incremented by 1 for every LDS or GDS instruction issued, as well as by Dword-count for scalar-memory reads. For example, s_memtime counts the same as an s_load_dwordx2.
  - Decremented by 1 for LDS/GDS reads or atomic-with-return when the data has been returned to VGPRs.
  - Incremented by 1 for each S_SENDMSG issued. Decremented by 1 when the message is sent out.
  - Decremented by 1 for LDS/GDS writes when the data has been written to LDS/GDS.
  - Decremented by 1 for each Dword returned from the data-cache (SMEM).
  - Ordering: instructions of different types are returned out-of-order. Instructions of the same type are returned in the order they were issued, except scalar-memory reads, which can return out-of-order (in which case S_WAITCNT 0 is the only legitimate value).
- EXP_CNT: VGPR-export count. Determines when data has been read out of the VGPR and sent to GDS, at which time it is safe to overwrite the contents of that VGPR.
  - Incremented when an Export/GDS instruction is issued from the wavefront buffer.
  - Decremented for exports/GDS when the last cycle of the export instruction is granted and executed (VGPRs read out).
  - Ordering: exports are kept in order only within each export type (color/null, position, parameter cache).
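Because VM_CNT returns in issue order, the value to wait on is the number of vector-memory instructions issued after the one whose result is needed. The Python toy model below (the class and method names are hypothetical) shows that reasoning. It ignores the counter's hardware maximum, and it does not apply to scalar-memory reads under LGKM_CNT, which can return out of order.

```python
from collections import deque


class VmCntModel:
    """Toy model of VM_CNT for one wavefront: one count per instruction, in-order return."""

    def __init__(self) -> None:
        self.outstanding = deque()  # oldest issued instruction on the left

    def issue(self, name: str) -> None:
        self.outstanding.append(name)  # counter incremented at issue

    @property
    def count(self) -> int:
        return len(self.outstanding)

    def wait_value_for(self, name: str) -> int:
        """Largest vmcnt value that still guarantees `name` has completed."""
        return self.count - list(self.outstanding).index(name) - 1

    def s_waitcnt(self, vmcnt: int) -> list:
        """Block until at most `vmcnt` remain; returns the names that completed."""
        done = []
        while self.count > vmcnt:
            done.append(self.outstanding.popleft())  # oldest returns first
        return done


vm = VmCntModel()
for load in ("load_a", "load_b", "load_c"):
    vm.issue(load)
n = vm.wait_value_for("load_a")  # 2: load_b and load_c may still be in flight
print(n, vm.s_waitcnt(n))        # load_a is guaranteed complete
```

Waiting for a larger count than strictly necessary lets unrelated work overlap the remaining loads, which is the scheduling freedom the counters are meant to give.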

Manually Inserted Wait States (NOPs)¶

The hardware does not check for the following dependencies; they must be resolved by inserting NOPs or independent instructions.

| First Instruction | Second Instruction | Wait | Notes |
|---|---|---|---|
| S_SETREG <*> | S_GETREG <same reg> | 2 | |
| S_SETREG <*> | S_SETREG <same reg> | 2 | |
| S_SETVSKIP | S_GETREG MODE | 2 | Reads VSKIP from MODE. |
| S_SETREG MODE.vskip | any vector op | 2 | Requires two NOPs or non-vector instructions. |
| VALU that sets VCC or EXEC | VALU that uses EXECZ or VCCZ as a data source | 5 | |
| VALU writes SGPR/VCC (readlane, cmp, add/sub, div_scale) | V_{READ,WRITE}LANE using that SGPR/VCC as the lane select | 4 | |
| VALU writes VCC (including v_div_scale) | V_DIV_FMAS | 4 | |
| | Write VGPRs holding writedata from those instructions. | 1 | BUFFER_STORE_* operations that use an SGPR for “offset” do not require any wait states. IMAGE_STORE_* and IMAGE_{F}CMPSWAP* ops with more than two DMASK bits set require this one wait state. Ops that use a 256-bit T# do not need a wait state. |
| VALU writes SGPR | VMEM reads that SGPR | 5 | Hardware assumes that there is no dependency here. If the VALU writes the SGPR that is used by a VMEM, the user must add five wait states. |
| SALU writes M0 | GDS, S_SENDMSG or S_TTRACE_DATA | 1 | |
| VALU writes VGPR | VALU DPP reads that VGPR | 2 | |
| VALU writes EXEC | VALU DPP op | 5 | ALU does not forward EXEC to DPP. |
| Mixed use of VCC (alias vs. SGPR#): v_readlane, v_readfirstlane, v_cmp, v_add*_i/u, v_sub*_i/u, v_div_scale* (writes VCC) | VALU which reads VCC as a constant (not as a carry-in, which needs 0 wait states) | 1 | VCC can be accessed by name or by the logical SGPR that holds VCC. The data-dependency check logic does not understand that these are the same register and does not prevent races. |
| S_SETREG TRAPSTS | RFE, RFE_restore | 1 | |
| SALU writes M0 | LDS “add-TID” instruction, buffer_store_LDS_dword, scratch or global with LDS = 1, VINTERP or LDS_direct | 1 | |
| SALU writes M0 | S_MOVREL* | 1 | |

Table : Required Software-inserted Wait States
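A compiler pass that enforces this table essentially looks up the required count for a dependent pair and subtracts whatever independent instructions already separate them. The Python sketch below encodes a few rows under hypothetical class labels, and assumes that each intervening independent instruction covers one wait state; it is an illustration of the bookkeeping, not a complete hazard recognizer.

```python
# A few rows of the wait-state table, keyed by (first, second) instruction class.
# The class labels are illustrative names, not assembler syntax.
REQUIRED_WAIT_STATES = {
    ("S_SETREG", "S_GETREG same reg"): 2,
    ("VALU sets VCC or EXEC", "VALU uses EXECZ or VCCZ"): 5,
    ("VALU writes SGPR", "VMEM reads that SGPR"): 5,
    ("VALU writes VGPR", "VALU DPP reads that VGPR"): 2,
    ("VALU writes EXEC", "VALU DPP op"): 5,
    ("SALU writes M0", "S_MOVREL*"): 1,
}


def wait_states_to_insert(first: str, second: str, independent_between: int) -> int:
    """Wait states still needed after `independent_between` unrelated instructions.

    Assumes each independent instruction between the pair covers one wait state.
    """
    required = REQUIRED_WAIT_STATES.get((first, second), 0)
    return max(0, required - independent_between)


# A VALU writes an SGPR, two unrelated instructions follow, then a VMEM reads it.
print(wait_states_to_insert("VALU writes SGPR", "VMEM reads that SGPR", 2))
```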

Arbitrary Divergent Control Flow¶

In the GCN architecture, conditional branches are handled in one of the following ways.

S_CBRANCH This case is used for simple control flow, where the decision to take a branch is based on a previous compare operation. This is the most common method for conditional branching.

S_CBRANCH_I/G_FORK and S_CBRANCH_JOIN This method, intended for complex, irreducible control flow graphs, is described in the rest of this section. The performance of this method is lower than that for S_CBRANCH on simple flow control; use it only when necessary.

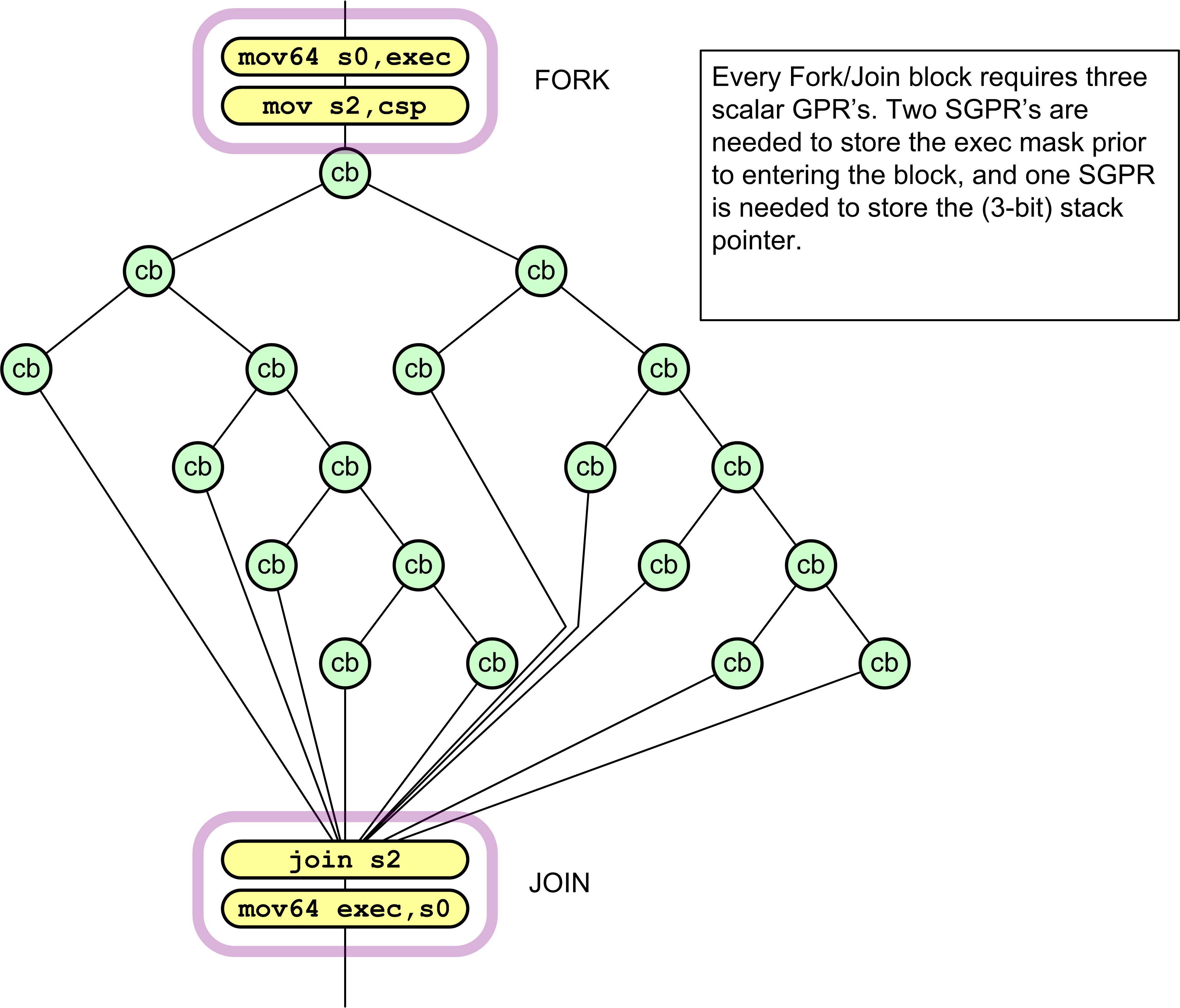

Conditional Branch (CBR) graphs are grouped into self-contained code blocks, denoted by FORK at the entrance point and JOIN at the exit point. The shader compiler must add these instructions into the code. This method uses a six-deep stack and requires three SGPRs for each fork/join block. Fork/Join blocks can be hierarchically nested to any depth (subject to SGPR requirements); they also can coexist with other conditional flow control or computed jumps.

Example of Complex Control Flow Graph¶

The register requirements per wavefront are:

CSP [2:0] - control stack pointer.

Six stack entries of 128 bits each, stored in SGPRs: { exec[63:0], PC[47:2] }

This method compares how many of the 64 threads go down the PASS path instead of the FAIL path; then, it selects the path with fewer threads first. Because the path executed first contains at most 50% of the currently active threads, each nested fork at least halves the active set, which limits the necessary stack depth to log2(64) = 6.

The following pseudo-code shows the details of CBRANCH Fork and Join operations.

```
S_CBRANCH_G_FORK arg0, arg1
    // arg1 is an sgpr-pair which holds 64bit (48bit) target address
S_CBRANCH_I_FORK arg0, #target_addr_offset[17:2]
    // target_addr_offset: 16b signed immediate offset
// PC: in this pseudo-code is pointing to the cbranch_*_fork instruction
mask_pass = SGPR[arg0] & exec
mask_fail = ~SGPR[arg0] & exec
if (mask_pass == exec)
    I_FORK : PC += 4 + target_addr_offset
    G_FORK : PC = SGPR[arg1]
else if (mask_fail == exec)
    PC += 4
else if (bitcount(mask_fail) < bitcount(mask_pass))
    exec = mask_fail
    I_FORK : SGPR[CSP*4] = { (pc + 4 + target_addr_offset), mask_pass }
    G_FORK : SGPR[CSP*4] = { SGPR[arg1], mask_pass }
    CSP++
    PC += 4
else
    exec = mask_pass
    SGPR[CSP*4] = { (pc+4), mask_fail }
    CSP++
    I_FORK : PC += 4 + target_addr_offset
    G_FORK : PC = SGPR[arg1]

S_CBRANCH_JOIN arg0
if (CSP == SGPR[arg0])        // SGPR[arg0] holds the CSP value when the FORK started
    PC += 4                   // this is the 2nd time to JOIN: continue with pgm
else
    CSP--                     // this is the 1st time to JOIN: jump to other FORK path
    {PC, EXEC} = SGPR[CSP*4]  // read 128-bits from 4 consecutive SGPRs
```
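The fork/join pseudo-code can be exercised with a small executable model. The Python sketch below follows the pseudo-code literally for one wavefront: a Python list stands in for the SGPR stack at SGPR[CSP*4], the stack depth is not bounded to six, and the class name is hypothetical. Like the pseudo-code, it does not show EXEC being restored to the pre-FORK mask after the second JOIN.

```python
MASK64 = (1 << 64) - 1


def popcount(x: int) -> int:
    return bin(x).count("1")


class ForkJoinModel:
    """Toy model of one wavefront's EXEC, CSP and fork/join stack."""

    def __init__(self, exec_mask: int) -> None:
        self.exec = exec_mask & MASK64
        self.csp = 0
        self.stack = []  # (pc, exec) entries; stands in for SGPR[CSP*4]

    def fork(self, cond: int, pc: int, target: int) -> int:
        """S_CBRANCH_*_FORK at address `pc`; `target` is the taken address. Returns next PC."""
        mask_pass = cond & self.exec
        mask_fail = ~cond & self.exec & MASK64
        if mask_pass == self.exec:          # every active thread passes
            return target
        if mask_fail == self.exec:          # every active thread fails
            return pc + 4
        if popcount(mask_fail) < popcount(mask_pass):
            self.exec = mask_fail           # run the smaller FAIL path first
            self.stack.append((target, mask_pass))
            self.csp += 1
            return pc + 4
        self.exec = mask_pass               # run the PASS path first
        self.stack.append((pc + 4, mask_fail))
        self.csp += 1
        return target

    def join(self, saved_csp: int, pc: int) -> int:
        """S_CBRANCH_JOIN at address `pc`; `saved_csp` is CSP when the FORK started."""
        if self.csp == saved_csp:           # second arrival: continue with the program
            return pc + 4
        self.csp -= 1                       # first arrival: switch to the other path
        next_pc, self.exec = self.stack.pop()
        return next_pc


wave = ForkJoinModel(exec_mask=0xF)         # four active threads
saved = wave.csp
pc = wave.fork(cond=0b0001, pc=0x100, target=0x200)  # one thread passes
print(hex(pc), bin(wave.exec))              # PASS path (1 thread) runs first
pc = wave.join(saved, pc=0x300)
print(hex(pc), bin(wave.exec))              # then the FAIL path (3 threads)
```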

Scalar ALU Operations¶

Scalar ALU (SALU) instructions operate on a single value per wavefront. These operations consist of 32-bit integer arithmetic and 32- or 64-bit bit-wise operations. The SALU also can perform operations directly on the Program Counter, allowing the program to create a call stack in SGPRs. Many operations also set the Scalar Condition Code bit (SCC) to indicate the result of a comparison, a carry-out, or whether the instruction result was zero.

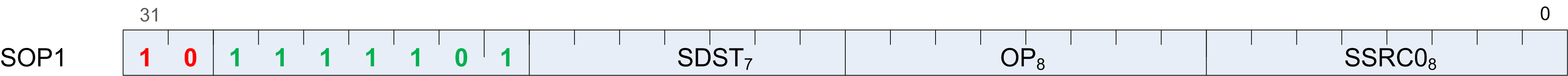

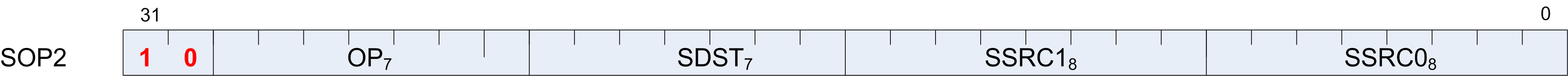

SALU Instruction Formats¶

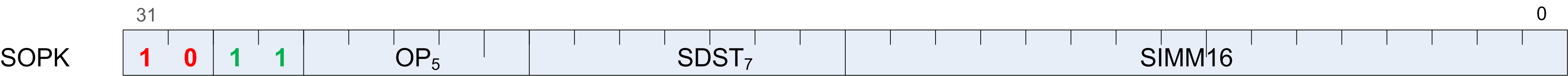

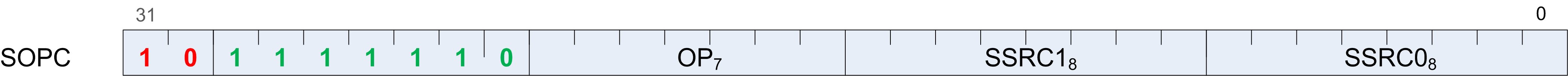

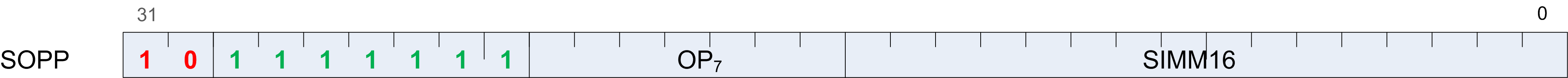

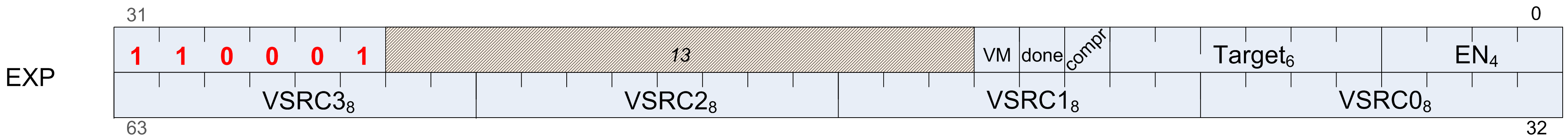

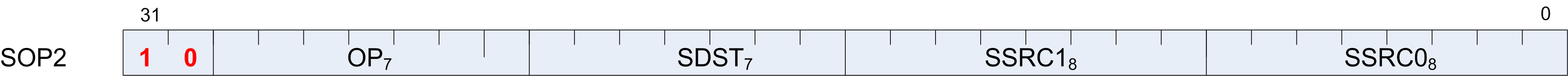

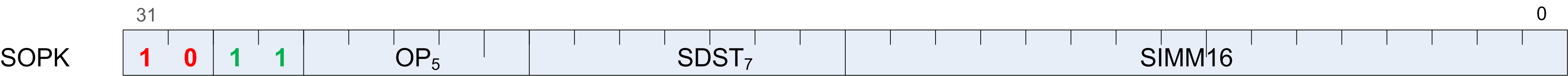

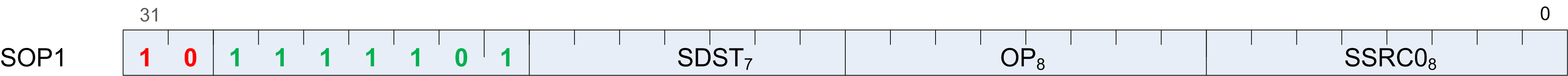

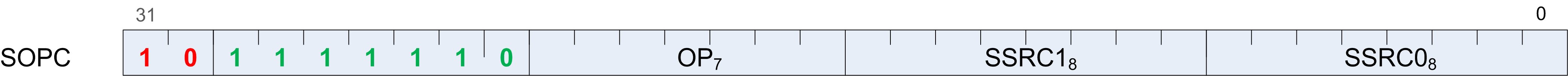

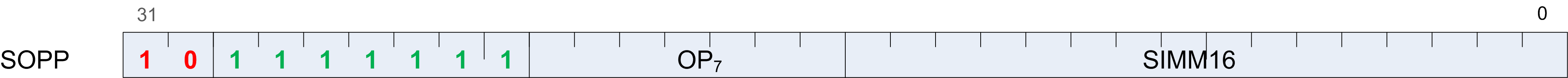

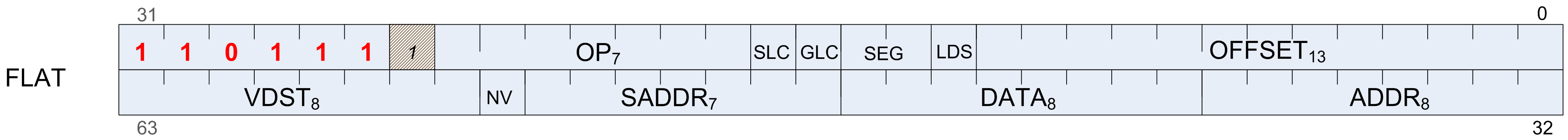

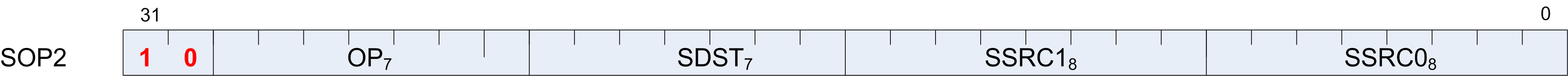

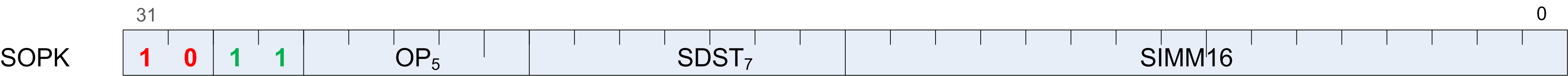

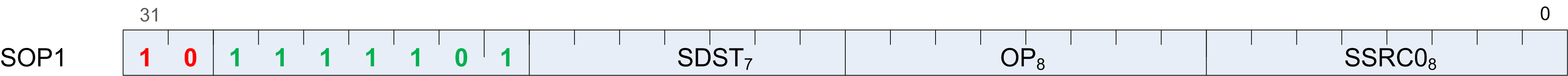

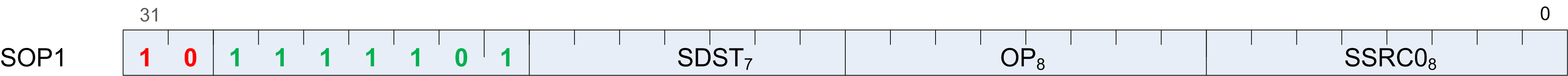

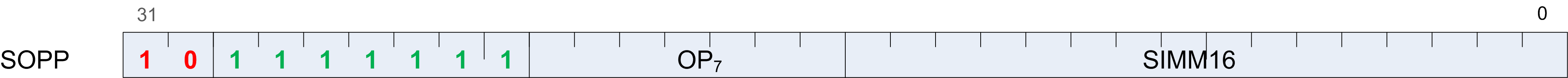

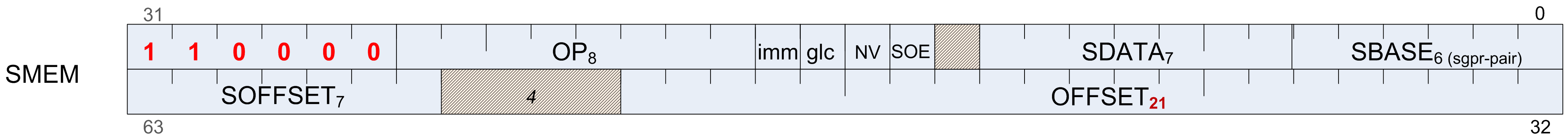

SALU instructions are encoded in one of five microcode formats, shown below:

Each of these instruction formats uses some of these fields:

| Field | Description |
|---|---|
| OP | Opcode: instruction to be executed. |
| SDST | Destination SGPR. |
| SSRC0 | First source operand. |
| SSRC1 | Second source operand. |
| SIMM16 | Signed immediate 16-bit integer constant. |

The lists of similar instructions sometimes use a condensed form using curly braces { } to express a list of possible names. For example, S_AND_{B32, B64} defines two legal instructions: S_AND_B32 and S_AND_B64.

Scalar ALU Operands¶

Valid operands of SALU instructions are:

SGPRs, including trap temporary SGPRs.

Mode register.

Status register (read-only).

M0 register.

TrapSts register.

EXEC mask.

VCC mask.

SCC.

PC.

Inline constants: integers from -16 to 64, and some floating-point values.

VCCZ, EXECZ, and SCC.

Hardware registers.

32-bit literal constant.

In the table below, 0-127 can be used as scalar sources or destinations; 128-255 can only be used as sources.

| Code | Meaning | Description |
|---|---|---|
| 0-101 | SGPR 0 to 101 | Scalar GPRs |
| 102 | FLAT_SCR_LO | Holds the low Dword of the flat-scratch memory descriptor |
| 103 | FLAT_SCR_HI | Holds the high Dword of the flat-scratch memory descriptor |
| 104 | XNACK_MASK_LO | Holds the low Dword of the XNACK mask |
| 105 | XNACK_MASK_HI | Holds the high Dword of the XNACK mask |
| 106 | VCC_LO | Holds the low Dword of the vector condition code |
| 107 | VCC_HI | Holds the high Dword of the vector condition code |
| 108-123 | TTMP0 to TTMP15 | Trap temps (privileged) |
| 124 | M0 | M0 register |
| 125 | reserved | reserved |
| 126 | EXEC_LO | Execute mask, low Dword |
| 127 | EXEC_HI | Execute mask, high Dword |
| 128 | 0 | zero |
| 129-192 | int 1 to 64 | Positive integer values. |
| 193-208 | int -1 to -16 | Negative integer values. |
| 209-234 | reserved | Unused. |
| 235 | SHARED_BASE | Memory Aperture definition. |
| 236 | SHARED_LIMIT | |
| 237 | PRIVATE_BASE | |
| 238 | PRIVATE_LIMIT | |
| 239 | POPS_EXITING_WAVE_ID | Primitive Ordered Pixel Shading wave ID. |
| 240 | 0.5 | single or double floats |
| 241 | -0.5 | |
| 242 | 1.0 | |
| 243 | -1.0 | |
| 244 | 2.0 | |
| 245 | -2.0 | |
| 246 | 4.0 | |
| 247 | -4.0 | |
| 248 | 1.0 / (2 * PI) | |
| 249-250 | reserved | unused |
| 251 | VCCZ | { zeros, VCCZ } |
| 252 | EXECZ | { zeros, EXECZ } |
| 253 | SCC | { zeros, SCC } |
| 254 | reserved | unused |
| 255 | Literal constant | 32-bit constant from the instruction stream. |

Table : Scalar Operands
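A disassembler maps each 8-bit scalar operand code to a register name or an inline constant. The Python sketch below implements that mapping from the table; it assumes the odd float codes 241, 243, 245, and 247 carry the negated values -0.5, -1.0, -2.0, and -4.0, and the function name is hypothetical. For code 255 it only reports that a literal follows; fetching the next instruction dword is left out.

```python
import math

NAMED_OPERANDS = {
    102: "FLAT_SCR_LO", 103: "FLAT_SCR_HI", 104: "XNACK_MASK_LO", 105: "XNACK_MASK_HI",
    106: "VCC_LO", 107: "VCC_HI", 124: "M0", 126: "EXEC_LO", 127: "EXEC_HI",
    235: "SHARED_BASE", 236: "SHARED_LIMIT", 237: "PRIVATE_BASE", 238: "PRIVATE_LIMIT",
    239: "POPS_EXITING_WAVE_ID", 251: "VCCZ", 252: "EXECZ", 253: "SCC", 255: "LITERAL",
}

# Inline floating-point constants; odd codes are assumed to be the negated values.
INLINE_FLOATS = {
    240: 0.5, 241: -0.5, 242: 1.0, 243: -1.0, 244: 2.0, 245: -2.0,
    246: 4.0, 247: -4.0, 248: 1.0 / (2.0 * math.pi),
}


def decode_scalar_operand(code: int):
    """Return a register name (str) or an inline constant value (int/float)."""
    if not 0 <= code <= 255:
        raise ValueError("scalar operand codes are 8 bits")
    if code <= 101:
        return f"s{code}"
    if 108 <= code <= 123:
        return f"ttmp{code - 108}"
    if code in NAMED_OPERANDS:
        return NAMED_OPERANDS[code]
    if code == 128:
        return 0
    if 129 <= code <= 192:
        return code - 128         # 1 .. 64
    if 193 <= code <= 208:
        return -(code - 192)      # -1 .. -16
    if code in INLINE_FLOATS:
        return INLINE_FLOATS[code]
    return "reserved"             # 125, 209-234, 249-250, 254


print([decode_scalar_operand(c) for c in (5, 106, 110, 129, 193, 241)])
```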

The SALU cannot use VGPRs or LDS. SALU instructions can use a 32-bit literal constant. This constant is part of the instruction stream and is available to all SALU microcode formats except SOPP and SOPK. Literal constants are used by setting the source instruction field to “literal” (255), and then the following instruction dword is used as the source value.

If any source SGPR is out-of-range, the value of SGPR0 is used instead.

If the destination SGPR is out-of-range, no SGPR is written with the result. However, SCC and possibly EXEC (if saveexec) will still be written.

If an instruction uses 64-bit data in SGPRs, the SGPR pair must be aligned to an even boundary. For example, it is legal to use SGPRs 2 and 3 or 8 and 9 (but not 11 and 12) to represent 64-bit data.

Scalar Condition Code (SCC)¶

The scalar condition code (SCC) is written as a result of executing most SALU instructions.

The SCC is set by many instructions:

Compare operations: 1 = true.

Arithmetic operations: 1 = carry out.

SCC = overflow for signed add and subtract operations. For add, overflow = both operands are of the same sign, and the MSB (sign bit) of the result is different from the sign of the operands. For subtract (A - B), overflow = A and B have opposite signs and the resulting sign is not the same as the sign of A.

Bit/logical operations: 1 = result was not zero.
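The signed-overflow rules above translate directly into code. This Python sketch models S_ADD_I32 and S_SUB_I32 on raw 32-bit patterns; the function names are illustrative, and only the D and SCC results are modeled.

```python
MASK32 = 0xFFFFFFFF


def sign(x: int) -> int:
    return (x >> 31) & 1


def s_add_i32(s0: int, s1: int) -> tuple:
    """D = S0 + S1; SCC = overflow (operands share a sign, result sign differs)."""
    s0, s1 = s0 & MASK32, s1 & MASK32
    d = (s0 + s1) & MASK32
    scc = int(sign(s0) == sign(s1) and sign(d) != sign(s0))
    return d, scc


def s_sub_i32(s0: int, s1: int) -> tuple:
    """D = S0 - S1; SCC = overflow (signs differ and result sign differs from S0)."""
    s0, s1 = s0 & MASK32, s1 & MASK32
    d = (s0 - s1) & MASK32
    scc = int(sign(s0) != sign(s1) and sign(d) != sign(s0))
    return d, scc


print(s_add_i32(0x7FFFFFFF, 1))  # INT_MAX + 1 wraps and sets SCC
print(s_sub_i32(0x80000000, 1))  # INT_MIN - 1 wraps and sets SCC
```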

Integer Arithmetic Instructions¶

This section describes the arithmetic operations supplied by the SALU. The table below shows the scalar integer arithmetic instructions:

| Instruction | Encoding | Sets SCC? | Operation |
|---|---|---|---|
| S_ADD_I32 | SOP2 | y | D = S0 + S1, SCC = overflow. |
| S_ADD_U32 | SOP2 | y | D = S0 + S1, SCC = carry out. |
| S_ADDC_U32 | SOP2 | y | D = S0 + S1 + SCC, SCC = carry out. |
| S_SUB_I32 | SOP2 | y | D = S0 - S1, SCC = overflow. |
| S_SUB_U32 | SOP2 | y | D = S0 - S1, SCC = carry out. |
| S_SUBB_U32 | SOP2 | y | D = S0 - S1 - SCC, SCC = carry out. |
| S_ABSDIFF_I32 | SOP2 | y | D = abs(S0 - S1), SCC = result not zero. |
| S_MIN_I32, S_MIN_U32 | SOP2 | y | D = (S0 < S1) ? S0 : S1. SCC = 1 if S0 was min. |
| S_MAX_I32, S_MAX_U32 | SOP2 | y | D = (S0 > S1) ? S0 : S1. SCC = 1 if S0 was max. |
| S_MUL_I32 | SOP2 | n | D = S0 * S1. Low 32 bits of result. |
| S_ADDK_I32 | SOPK | y | D = D + simm16, SCC = overflow. simm16 is sign-extended. |
| S_MULK_I32 | SOPK | n | D = D * simm16. Returns the low 32 bits. simm16 is sign-extended. |
| S_ABS_I32 | SOP1 | y | D.i = abs(S0.i). SCC = result not zero. |
| S_SEXT_I32_I8 | SOP1 | n | D = { 24{S0[7]}, S0[7:0] }. |
| S_SEXT_I32_I16 | SOP1 | n | D = { 16{S0[15]}, S0[15:0] }. |

Table : Integer Arithmetic Instructions
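Since the SALU integer adds are 32-bit, a 64-bit add is built from an S_ADD_U32 on the low dwords followed by an S_ADDC_U32 on the high dwords, with SCC carrying between them. The Python sketch below models that pairing; it treats the SCC written by S_ADDC_U32 as the unsigned carry-out, which is an assumption of this sketch, and the helper names are illustrative.

```python
MASK32 = 0xFFFFFFFF


def s_add_u32(s0: int, s1: int) -> tuple:
    """D = S0 + S1, SCC = carry out."""
    total = (s0 & MASK32) + (s1 & MASK32)
    return total & MASK32, total >> 32


def s_addc_u32(s0: int, s1: int, scc: int) -> tuple:
    """D = S0 + S1 + SCC; SCC modeled as the unsigned carry out (assumption)."""
    total = (s0 & MASK32) + (s1 & MASK32) + (scc & 1)
    return total & MASK32, total >> 32


def add_u64(a: int, b: int) -> int:
    """64-bit add as a pair of SALU ops on the low and high dwords."""
    lo, scc = s_add_u32(a & MASK32, b & MASK32)
    hi, _ = s_addc_u32((a >> 32) & MASK32, (b >> 32) & MASK32, scc)
    return (hi << 32) | lo


print(hex(add_u64(0x1_FFFFFFFF, 0x1_00000001)))  # carry from the low dword
```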

Conditional Instructions¶

Conditional instructions use the SCC flag to determine whether to perform the operation, or (for CSELECT) which source operand to use.

| Instruction | Encoding | Sets SCC? | Operation |
|---|---|---|---|
| S_CSELECT_{B32, B64} | SOP2 | n | D = SCC ? S0 : S1. |
| S_CMOVK_I32 | SOPK | n | if (SCC) D = signext(simm16). |
| S_CMOV_{B32,B64} | SOP1 | n | if (SCC) D = S0, else NOP. |

Table : Conditional Instructions

Comparison Instructions¶

These instructions compare two values and set the SCC to 1 if the comparison yielded a TRUE result.

| Instruction | Encoding | Sets SCC? | Operation |
|---|---|---|---|
| S_CMP_EQ_U64, S_CMP_NE_U64 | SOPC | y | Compare two 64-bit source values. SCC = S0 <cond> S1. |
| S_CMP_{EQ,NE,GT,GE,LE,LT}_{I32,U32} | SOPC | y | Compare two source values. SCC = S0 <cond> S1. |
| S_CMPK_{EQ,NE,GT,GE,LE,LT}_{I32,U32} | SOPK | y | Compare Dest SGPR to a constant. SCC = DST <cond> simm16. simm16 is zero-extended (U32) or sign-extended (I32). |
| S_BITCMP0_{B32,B64} | SOPC | y | Test for “is a bit zero”. SCC = !S0[S1]. |
| S_BITCMP1_{B32,B64} | SOPC | y | Test for “is a bit one”. SCC = S0[S1]. |

Table : Comparison Instructions
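The extension rule for S_CMPK matters whenever the top bit of simm16 is set: the same 16 encoding bits mean -1 for an I32 compare but 65535 for a U32 compare. The Python sketch below models S_CMPK_<cond>_{I32,U32} under that rule; the `kind` and `cond` string parameters are a hypothetical calling convention, not assembler syntax.

```python
import operator

MASK32 = 0xFFFFFFFF
CONDS = {"EQ": operator.eq, "NE": operator.ne, "GT": operator.gt,
         "GE": operator.ge, "LE": operator.le, "LT": operator.lt}


def as_i32(x: int) -> int:
    x &= MASK32
    return x - (1 << 32) if x & 0x80000000 else x


def sext16(x: int) -> int:
    x &= 0xFFFF
    return x - 0x10000 if x & 0x8000 else x


def s_cmpk(cond: str, dst: int, simm16: int, kind: str) -> int:
    """SCC = DST <cond> simm16, with simm16 extended according to `kind`."""
    if kind == "I32":
        a, b = as_i32(dst), sext16(simm16)    # signed compare, sign-extended constant
    elif kind == "U32":
        a, b = dst & MASK32, simm16 & 0xFFFF  # unsigned compare, zero-extended constant
    else:
        raise ValueError(kind)
    return int(CONDS[cond](a, b))


print(s_cmpk("EQ", 0xFFFFFFFF, 0xFFFF, "I32"), s_cmpk("EQ", 0xFFFFFFFF, 0xFFFF, "U32"))
```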

Bit-Wise Instructions¶

Bit-wise instructions operate on 32- or 64-bit data without interpreting it as having a type. For the bit-wise operations marked as setting SCC in the table below, SCC is set if the result is nonzero.

| Instruction | Encoding | Sets SCC? | Operation |
|---|---|---|---|
| S_MOV_{B32,B64} | SOP1 | n | D = S0 |
| S_MOVK_I32 | SOPK | n | D = signext(simm16) |
| {S_AND,S_OR,S_XOR}_{B32,B64} | SOP2 | y | D = S0 & S1, S0 OR S1, S0 XOR S1 |
| {S_ANDN2,S_ORN2}_{B32,B64} | SOP2 | y | D = S0 & ~S1, S0 OR ~S1 |
| {S_NAND,S_NOR,S_XNOR}_{B32,B64} | SOP2 | y | D = ~(S0 & S1), ~(S0 OR S1), ~(S0 XOR S1) |
| S_LSHL_{B32,B64} | SOP2 | y | D = S0 << S1[4:0] ([5:0] for B64). |
| S_LSHR_{B32,B64} | SOP2 | y | D = S0 >> S1[4:0] ([5:0] for B64). |
| S_ASHR_{I32,I64} | SOP2 | y | D = sext(S0 >> S1[4:0]) ([5:0] for I64). |
| S_BFM_{B32,B64} | SOP2 | n | Bit field mask. D = ((1 << S0[4:0]) - 1) << S1[4:0]. |
| S_BFE_U32, S_BFE_U64, S_BFE_I32, S_BFE_I64 (signed/unsigned) | SOP2 | n | Bit Field Extract, then sign-extend the result for I32/I64 instructions. S0 = data, S1[5:0] = offset, S1[22:16] = width. |
| S_NOT_{B32,B64} | SOP1 | y | D = ~S0. |
| S_WQM_{B32,B64} | SOP1 | y | D = wholeQuadMode(S0). If any bit in a group of four is set to 1, set all four bits of the resulting group to 1. |
| S_QUADMASK_{B32,B64} | SOP1 | y | D[0] = OR(S0[3:0]), D[1] = OR(S0[7:4]), etc. |
| S_BREV_{B32,B64} | SOP1 | n | D = S0 with the bit order reversed (D[31:0] = S0[0:31]). |
| S_BCNT0_I32_{B32,B64} | SOP1 | y | D = CountZeroBits(S0). |
| S_BCNT1_I32_{B32,B64} | SOP1 | y | D = CountOneBits(S0). |
| S_FF0_I32_{B32,B64} | SOP1 | n | D = bit position of the first zero in S0, starting from the LSB. -1 if not found. |
| S_FF1_I32_{B32,B64} | SOP1 | n | D = bit position of the first one in S0, starting from the LSB. -1 if not found. |
| S_FLBIT_I32_{B32,B64} | SOP1 | n | Find last bit. D = the number of zeros before the first one, starting from the MSB. Returns -1 if none. |
| S_FLBIT_I32, S_FLBIT_I32_I64 | SOP1 | n | Count how many bits in a row (from MSB to LSB) are the same as the sign bit. Return -1 if the input is zero or all 1’s (-1). 32-bit pseudo-code: if (S0 == 0 OR S0 == -1) D = -1; else { D = 0; for (I = 31 .. 0) { if (S0[I] == S0[31]) D++; else break; } }. This opcode behaves the same as V_FFBH_I32. |
| S_BITSET0_{B32,B64} | SOP1 | n | D[S0[4:0]] = 0 ([5:0] for B64). |
| S_BITSET1_{B32,B64} | SOP1 | n | D[S0[4:0]] = 1 ([5:0] for B64). |
| S_{AND,OR,XOR,ANDN2,ORN2,NAND,NOR,XNOR}_SAVEEXEC_B64 | SOP1 | y | Save the EXEC mask, then apply a bit-wise operation to it. D = EXEC; EXEC = S0 <op> EXEC; SCC = (EXEC != 0). |
| S_{ANDN1,ANDN2}_WREXEC_B64 | SOP1 | y | N1: EXEC, D = ~S0 & EXEC. N2: EXEC, D = S0 & ~EXEC. Both D and EXEC get the same result. SCC = (result != 0). |
| S_MOVRELS_{B32,B64}, S_MOVRELD_{B32,B64} | SOP1 | n | Move a value into an SGPR relative to the value in M0. MOVRELS: D = SGPR[S0+M0]. MOVRELD: SGPR[D+M0] = S0. The index must be even for 64-bit operations. M0 is an unsigned index. |

Table : Bit-Wise Instructions
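S_WQM, S_QUADMASK, and the SAVEEXEC family are easiest to understand on concrete masks. The Python sketch below models the 64-bit variants as described in the table (SCC = result nonzero); the function names mirror the mnemonics but are only illustrative models.

```python
MASK64 = (1 << 64) - 1


def s_wqm_b64(s0: int) -> tuple:
    """D = wholeQuadMode(S0): any set bit lights all four bits of its quad. SCC = D != 0."""
    d = 0
    for quad in range(16):
        if (s0 >> (4 * quad)) & 0xF:
            d |= 0xF << (4 * quad)
    return d, int(d != 0)


def s_quadmask_b64(s0: int) -> tuple:
    """D[i] = OR(S0[4i+3:4i]). SCC = D != 0."""
    d = 0
    for quad in range(16):
        if (s0 >> (4 * quad)) & 0xF:
            d |= 1 << quad
    return d, int(d != 0)


def s_and_saveexec_b64(s0: int, exec_mask: int) -> tuple:
    """D = EXEC; EXEC = S0 & EXEC; SCC = (EXEC != 0). Returns (D, new EXEC, SCC)."""
    saved = exec_mask & MASK64
    new_exec = s0 & saved
    return saved, new_exec, int(new_exec != 0)


print(hex(s_wqm_b64(0x102)[0]), bin(s_quadmask_b64(0x102)[0]))
```

In S_AND_SAVEEXEC_B64, the saved copy in D is what later allows the original EXEC mask to be restored once the narrowed region finishes.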

Special Instructions¶

These instructions access hardware internal registers.

| Instruction | Encoding | Sets SCC? | Operation |
|---|---|---|---|
| S_GETREG_B32 | SOPK* | n | Read a hardware register into the LSBs of D. |
| S_SETREG_B32 | SOPK* | n | Write the LSBs of D into a hardware register. (Note that D is a source SGPR.) Must add an S_NOP between two consecutive S_SETREG to the same register. |
| S_SETREG_IMM32_B32 | SOPK* | n | S_SETREG where 32-bit data comes from a literal constant (so this is a 64-bit instruction format). |

Table : Hardware Internal Registers

The hardware register is specified in the SIMM16 field of the instruction, using the codes in the Hardware Register Values table. Some bits of SIMM16 specify which register to read or write, and additional bits specify which bits in the special register to read or write:

SIMM16 = {size[4:0], offset[4:0], hwRegId[5:0]}; offset is 0..31, size is 1..32.

| Code | Register | Description |
|---|---|---|
| 0 | reserved | |
| 1 | MODE | R/W. |
| 2 | STATUS | Read only. |
| 3 | TRAPSTS | R/W. |
| 4 | HW_ID | Read only. Debug only. |
| 5 | GPR_ALLOC | Read only. {sgpr_size, sgpr_base, vgpr_size, vgpr_base}. |
| 6 | LDS_ALLOC | Read only. {lds_size, lds_base}. |
| 7 | IB_STS | Read only. {valu_cnt, lgkm_cnt, exp_cnt, vm_cnt}. |
| 8-15 | reserved | |
| 16 | TBA_LO | Trap base address register [31:0]. |
| 17 | TBA_HI | Trap base address register [47:32]. |
| 18 | TMA_LO | Trap memory address register [31:0]. |
| 19 | TMA_HI | Trap memory address register [47:32]. |

Table : Hardware Register Values
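The SIMM16 layout {size[4:0], offset[4:0], hwRegId[5:0]} places hwRegId in bits 5:0, offset in bits 10:6, and size in bits 15:11. Since size ranges over 1..32 but has only five bits, the Python sketch below assumes the field stores size - 1. It also models what S_GETREG_B32 returns for a given register value. The helper names are hypothetical, and the register codes come from the table above.

```python
HW_REG_IDS = {"MODE": 1, "STATUS": 2, "TRAPSTS": 3, "HW_ID": 4,
              "GPR_ALLOC": 5, "LDS_ALLOC": 6, "IB_STS": 7}


def hwreg_simm16(reg: str, offset: int = 0, size: int = 32) -> int:
    """Pack SIMM16 = {size[4:0], offset[4:0], hwRegId[5:0]}.

    Assumption: the 5-bit size field stores size - 1, so that 1..32 fits.
    """
    if not 0 <= offset <= 31 or not 1 <= size <= 32:
        raise ValueError("offset must be 0..31 and size 1..32")
    return ((size - 1) << 11) | (offset << 6) | HW_REG_IDS[reg]


def s_getreg_b32(reg_value: int, simm16: int) -> int:
    """Model of the read: return `size` bits starting at `offset` in the LSBs of D."""
    offset = (simm16 >> 6) & 0x1F
    size = ((simm16 >> 11) & 0x1F) + 1
    return (reg_value >> offset) & ((1 << size) - 1)


imm = hwreg_simm16("TRAPSTS", offset=0, size=9)  # just the EXCP[8:0] status bits
print(hex(imm), bin(s_getreg_b32(0x2104, imm)))
```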

| Field | Bits | Description |
|---|---|---|
| VM_CNT | 23:22, 3:0 | Number of VMEM instructions issued but not yet returned. |
| EXP_CNT | 6:4 | Number of Exports issued that have not yet read their data from VGPRs. |
| LGKM_CNT | 11:8 | LDS, GDS, Constant-memory and Message instructions issued-but-not-completed count. |
| VALU_CNT | 14:12 | Number of VALU instructions outstanding for this wavefront. |

Table : IB_STS
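VM_CNT is split across two bit ranges in IB_STS. The Python sketch below reassembles it, assuming bits 23:22 supply the upper two bits of a 6-bit count, and extracts the other counters from the bit positions listed in the table. The function names are hypothetical.

```python
def bits(value: int, hi: int, lo: int) -> int:
    """Extract bits hi:lo (inclusive)."""
    return (value >> lo) & ((1 << (hi - lo + 1)) - 1)


def decode_ib_sts(ib_sts: int) -> dict:
    return {
        # Assumption: bits 23:22 are the upper two bits of a 6-bit VM_CNT.
        "vm_cnt": (bits(ib_sts, 23, 22) << 4) | bits(ib_sts, 3, 0),
        "exp_cnt": bits(ib_sts, 6, 4),
        "lgkm_cnt": bits(ib_sts, 11, 8),
        "valu_cnt": bits(ib_sts, 14, 12),
    }


sample = (0b10 << 22) | 0x5 | (3 << 4) | (7 << 8) | (2 << 12)
print(decode_ib_sts(sample))
```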

| Field | Bits | Description |
|---|---|---|
| VGPR_BASE | 5:0 | Physical address of first VGPR assigned to this wavefront, as [7:2]. |
| VGPR_SIZE | 13:8 | Number of VGPRs assigned to this wavefront, as [7:2]. 0 = 4 VGPRs, 1 = 8 VGPRs, etc. |
| SGPR_BASE | 21:16 | Physical address of first SGPR assigned to this wavefront, as [7:3]. |
| SGPR_SIZE | 27:24 | Number of SGPRs assigned to this wave, as [7:3]. 0 = 8 SGPRs, 1 = 16 SGPRs, etc. |

Table : GPR_ALLOC

| Field | Bits | Description |
|---|---|---|
| LDS_BASE | 7:0 | Physical address of first LDS location assigned to this wavefront, in units of 64 Dwords. |
| LDS_SIZE | 20:12 | Amount of LDS space assigned to this wavefront, in units of 64 Dwords. |

Table : LDS_ALLOC
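The GPR_ALLOC and LDS_ALLOC fields are stored in scaled units. The Python sketch below converts them to register counts and Dword amounts. It reads "as [7:2]" and "as [7:3]" as meaning the field holds bits 7:2 or 7:3 of the value (so the base is shifted left by 2 or 3), which is an interpretation. The size conversions follow the "0 = 4 VGPRs" and "0 = 8 SGPRs" examples. The function names are hypothetical.

```python
def bits(value: int, hi: int, lo: int) -> int:
    """Extract bits hi:lo (inclusive)."""
    return (value >> lo) & ((1 << (hi - lo + 1)) - 1)


def decode_gpr_alloc(v: int) -> dict:
    return {
        "vgpr_base": bits(v, 5, 0) << 2,          # field holds address bits [7:2]
        "vgpr_count": (bits(v, 13, 8) + 1) * 4,   # 0 = 4 VGPRs, 1 = 8 VGPRs, ...
        "sgpr_base": bits(v, 21, 16) << 3,        # field holds address bits [7:3]
        "sgpr_count": (bits(v, 27, 24) + 1) * 8,  # 0 = 8 SGPRs, 1 = 16 SGPRs, ...
    }


def decode_lds_alloc(v: int) -> dict:
    return {
        "lds_base_dwords": bits(v, 7, 0) * 64,    # units of 64 Dwords
        "lds_size_dwords": bits(v, 20, 12) * 64,
    }


print(decode_gpr_alloc(3 | (1 << 8) | (2 << 16) | (1 << 24)))
print(decode_lds_alloc(2 | (4 << 12)))
```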

Vector ALU Operations¶

Vector ALU instructions (VALU) perform an arithmetic or logical operation on data for each of 64 threads and write results back to VGPRs, SGPRs or the EXEC mask.

Parameter interpolation is a mixed VALU and LDS instruction, and is described in the Data Share chapter.

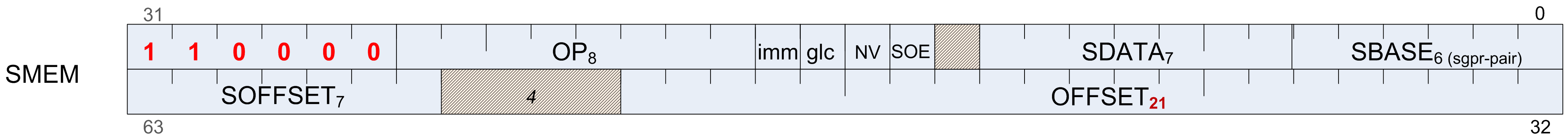

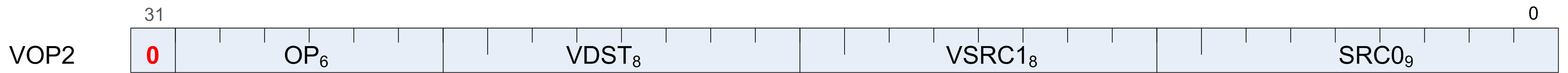

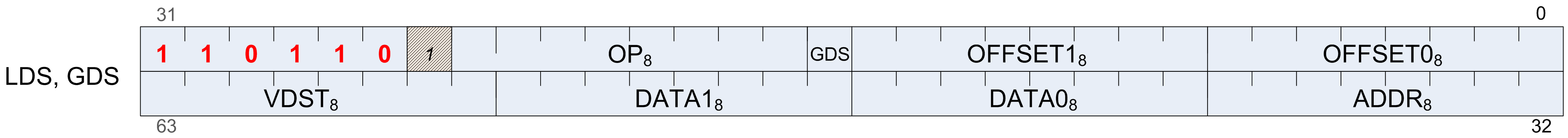

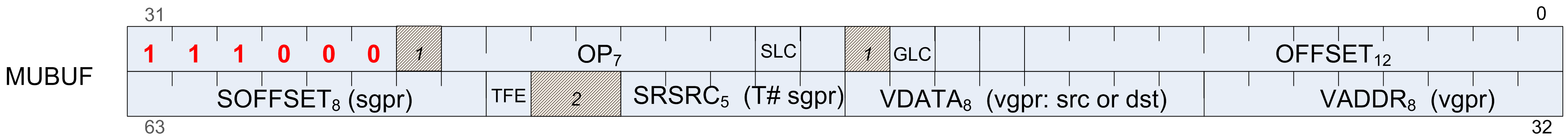

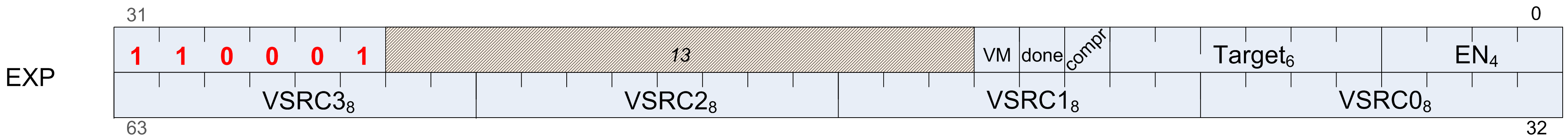

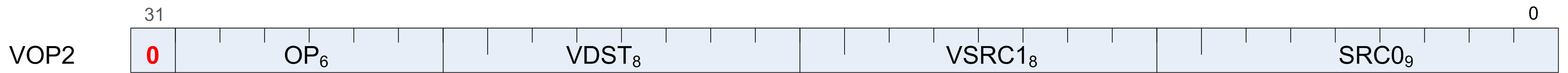

Microcode Encodings¶

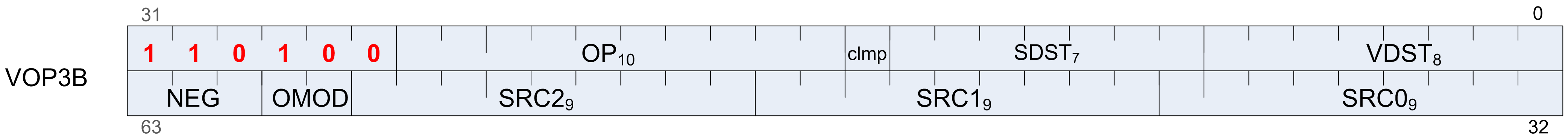

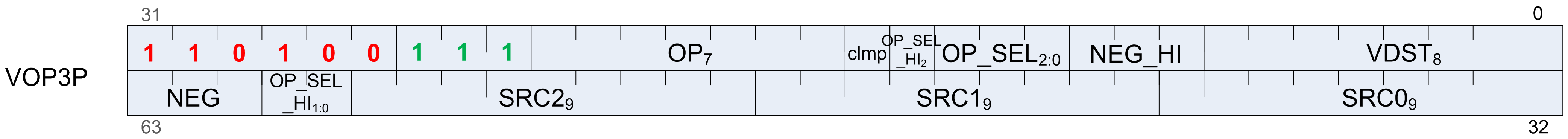

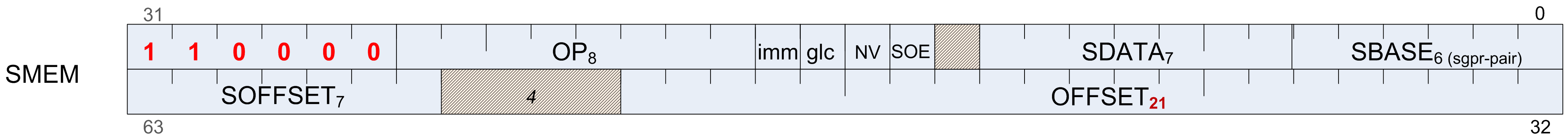

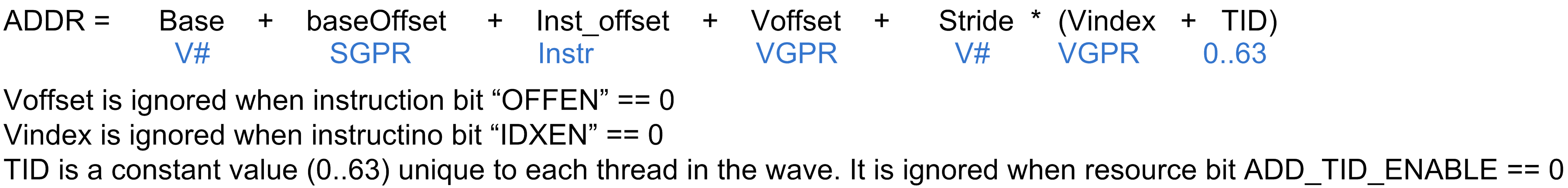

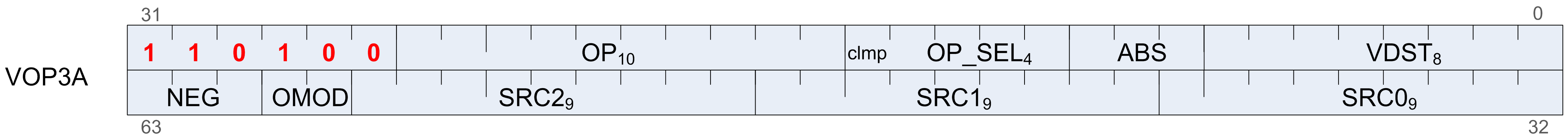

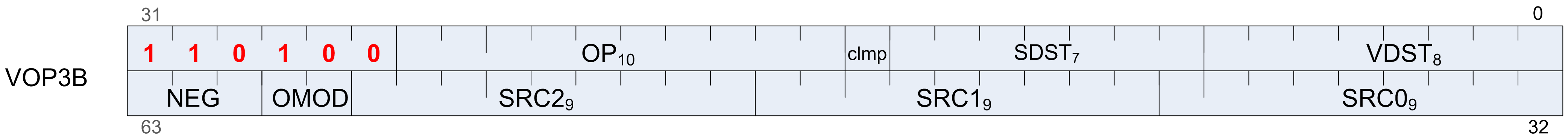

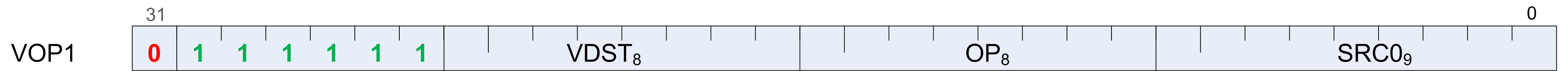

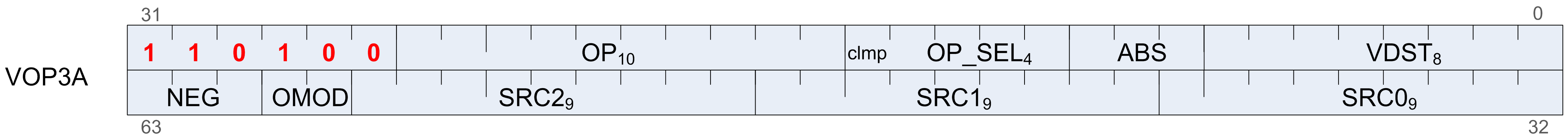

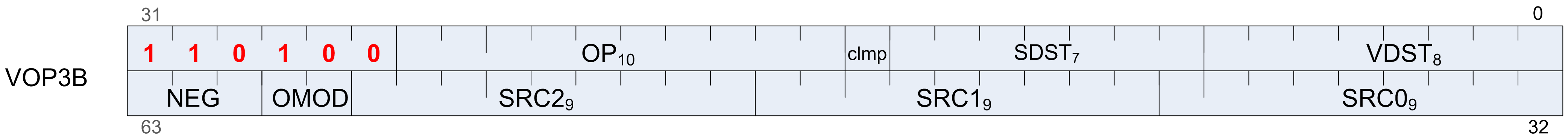

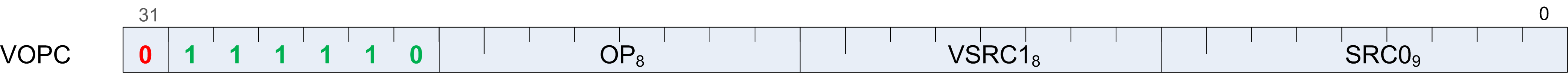

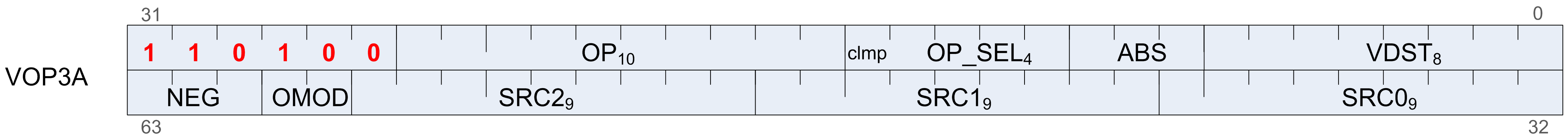

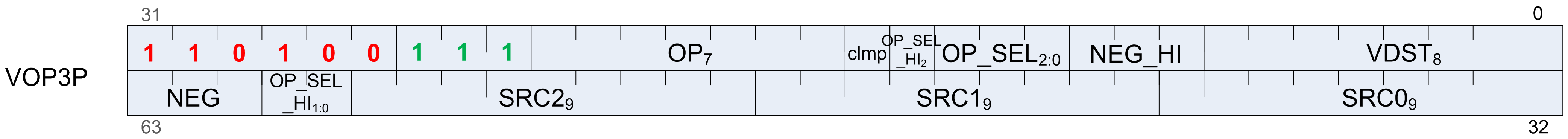

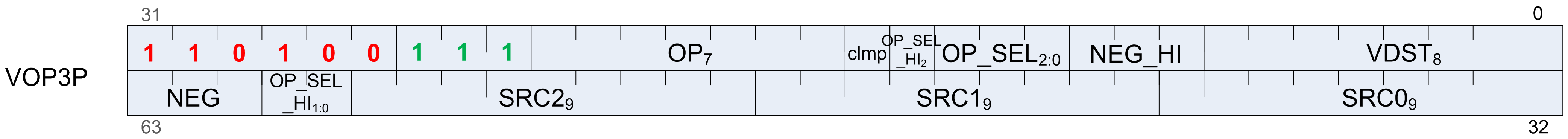

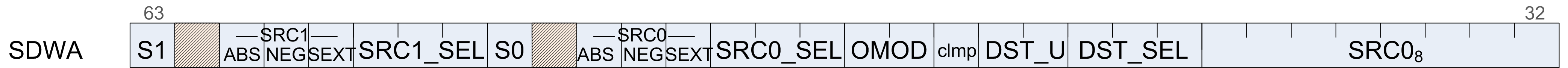

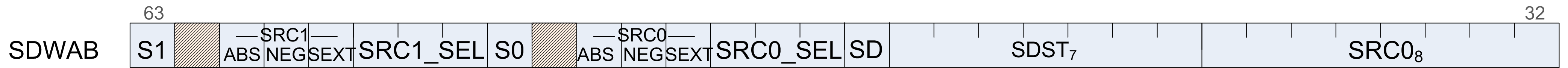

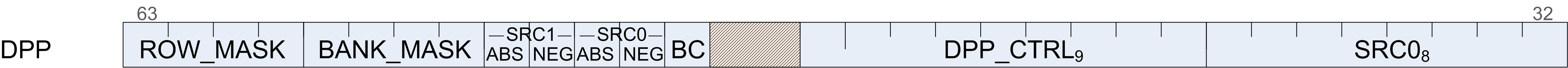

Most VALU instructions are available in two encodings: VOP3 which uses 64-bits of instruction and has the full range of capabilities, and one of three 32-bit encodings that offer a restricted set of capabilities. A few instructions are only available in the VOP3 encoding. The only instructions that cannot use the VOP3 format are the parameter interpolation instructions.

When an instruction is available in two microcode formats, it is up to the user to decide which to use. It is recommended to use the 32-bit encoding whenever possible.

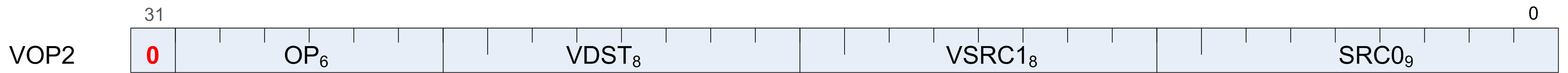

The microcode encodings are shown below.

VOP2 is for instructions with two inputs and a single vector destination. Instructions that have a carry-out implicitly write the carry-out to the VCC register.

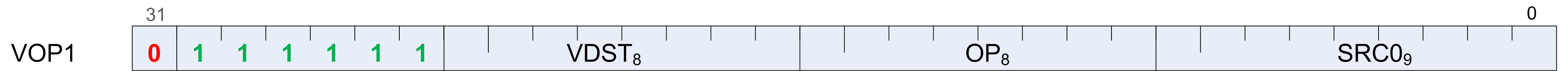

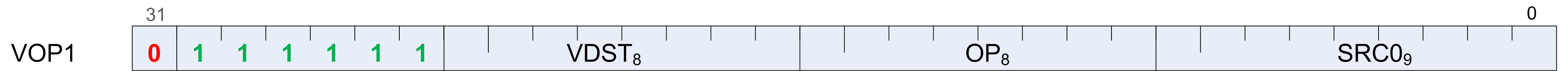

VOP1 is for instructions with no inputs or a single input and one destination.

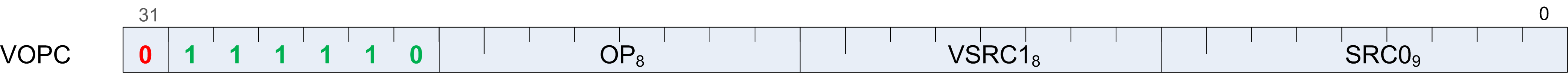

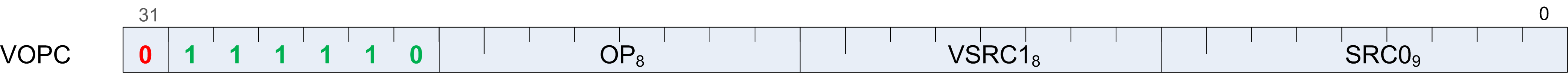

VOPC is for comparison instructions.

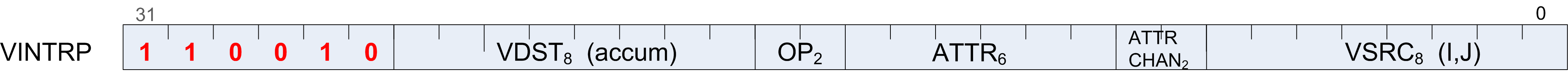

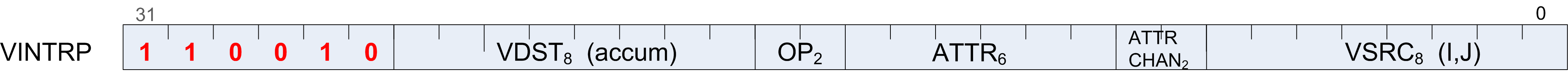

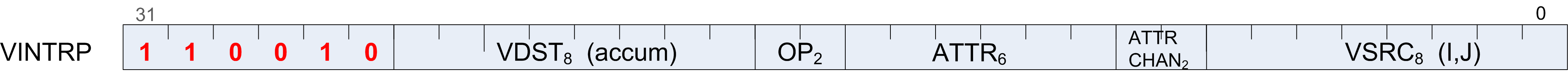

VINTRP is for parameter interpolation instructions.

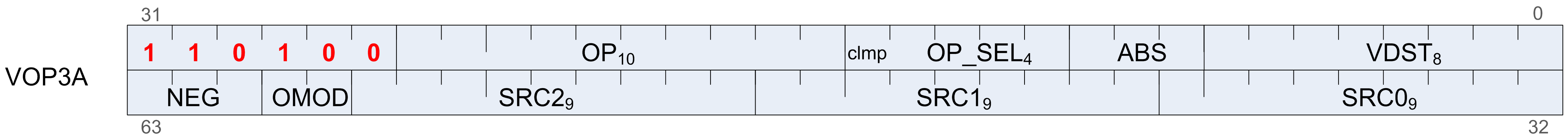

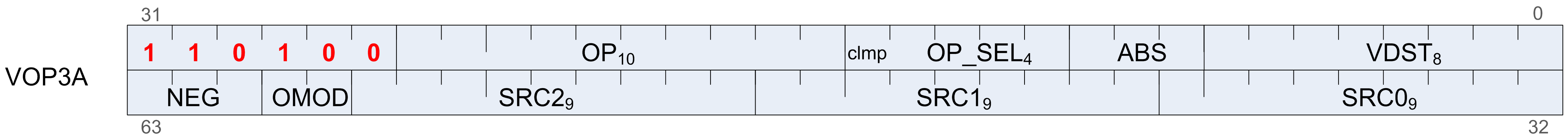

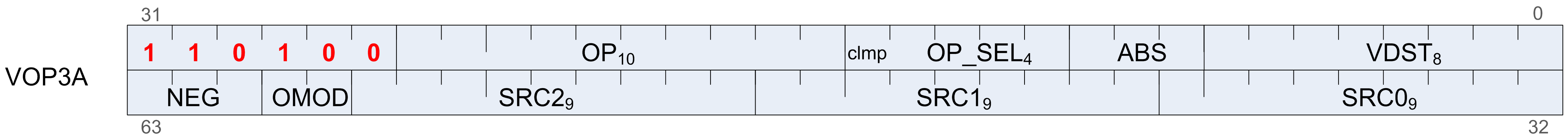

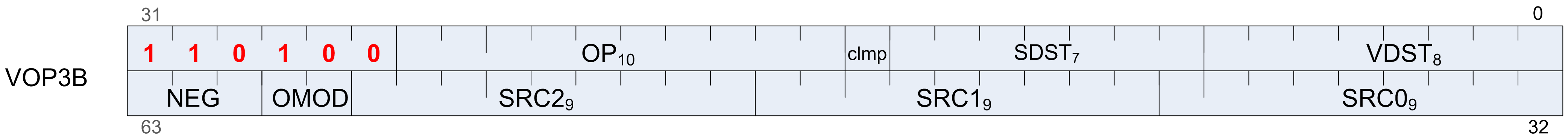

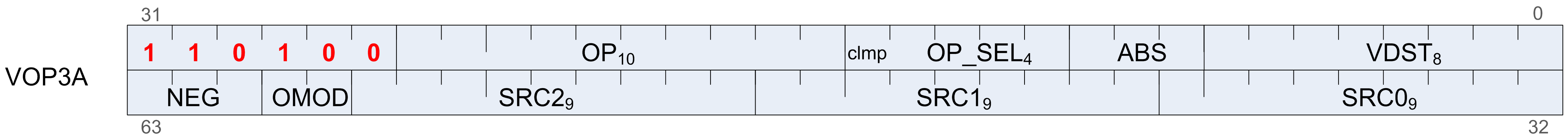

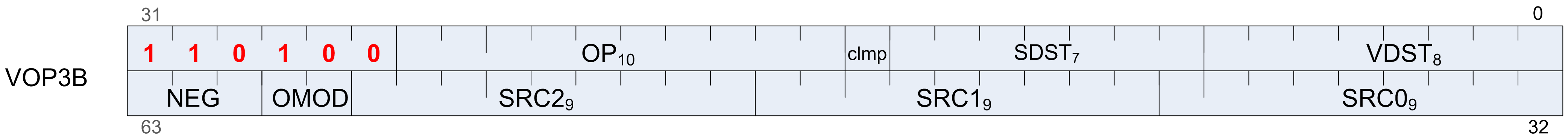

VOP3 is for instructions with up to three inputs, input modifiers (negate and absolute value), and output modifiers. There are two forms of VOP3: one which uses a scalar destination field (used only for div_scale, integer add and subtract); this is designated VOP3b. All other instructions use the common form, designated VOP3a.

Any of the 32-bit microcode formats may use a 32-bit literal constant, but not VOP3.

VOP3P is for instructions that use “packed math”: They perform the operation on a pair of input values that are packed into the high and low 16-bits of each operand; the two 16-bit results are written to a single VGPR as two packed values.

Operands¶

All VALU instructions take at least one input operand (except V_NOP and V_CLREXCP). The data-size of the operands is explicitly defined in the name of the instruction. For example, V_MAD_F32 operates on 32-bit floating point data.

Instruction Inputs¶

VALU instructions can use any of the following sources for input, subject to restrictions listed below:

VGPRs.

SGPRs.

Inline constants - constant selected by a specific VSRC value.

Literal constant - 32-bit value in the instruction stream. When a literal constant is used with a 64bit instruction, the literal is expanded to 64 bits by: padding the LSBs with zeros for floats, padding the MSBs with zeros for unsigned ints, and by sign-extending signed ints.

LDS direct data read.

M0.

EXEC mask.

Limitations

At most one SGPR can be read per instruction, but the value can be used for more than one operand.

At most one literal constant can be used, and only when an SGPR or M0 is not used as a source.

Only SRC0 can use LDS_DIRECT (see Chapter 10, “Data Share Operations”).

Special Cases for Constants

VALU “ADDC”, “SUBB” and CNDMASK all implicitly use an SGPR value (VCC), so these instructions cannot use an additional SGPR or literal constant.

Instructions using the VOP3 form and also using floating-point inputs have the option of applying absolute value (ABS field) or negate (NEG field) to any of the input operands.

Literal Expansion to 64 bits¶

Literal constants are 32 bits, but they can be used as sources that normally require 64-bit data:

64-bit float: the literal supplies the upper 32 bits, and the lower 32 bits are padded with zeros.

64-bit unsigned integer: zero-extended to 64 bits.

64-bit signed integer: sign-extended to 64 bits.
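The three expansion rules can be sketched in C as follows. The function names are hypothetical; each takes the raw 32-bit literal and returns the 64-bit operand bits it would supply, under the rules listed above.

```c
#include <stdint.h>

/* 64-bit float: the literal becomes the upper 32 bits, lower 32 bits are zero. */
uint64_t expand_literal_f64(uint32_t lit)
{
    return (uint64_t)lit << 32;
}

/* 64-bit unsigned integer: zero-extend. */
uint64_t expand_literal_u64(uint32_t lit)
{
    return (uint64_t)lit;
}

/* 64-bit signed integer: sign-extend, copying bit 31 into bits [63:32]. */
uint64_t expand_literal_i64(uint32_t lit)
{
    uint64_t hi = (lit & 0x80000000u) ? 0xFFFFFFFF00000000ull : 0;
    return hi | lit;
}
```

For example, the literal 0x3FF00000 used as a 64-bit float operand becomes 0x3FF0000000000000, which is 1.0 in double precision, while 0xFFFFFFFF becomes 0x00000000FFFFFFFF as an unsigned operand and -1 as a signed one.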

Instruction Outputs¶

VALU instructions typically write their results to VGPRs specified in the VDST field of the microcode word. A thread only writes a result if the associated bit in the EXEC mask is set to 1.
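The EXEC-masked write can be modelled as a per-thread loop. This C sketch assumes a 64-thread wavefront, matching the 64-bit EXEC mask; `masked_vgpr_write` and `WAVE_SIZE` are hypothetical names, and threads whose EXEC bit is 0 keep their previous VGPR contents.

```c
#include <stdint.h>

#define WAVE_SIZE 64  /* assumption: 64 threads per wavefront */

/* Write per-thread results to a destination VGPR, skipping inactive threads. */
void masked_vgpr_write(uint32_t vdst[WAVE_SIZE],
                       const uint32_t result[WAVE_SIZE], uint64_t exec)
{
    for (int lane = 0; lane < WAVE_SIZE; lane++) {
        if ((exec >> lane) & 1u)      /* EXEC bit set: thread is active */
            vdst[lane] = result[lane];
        /* EXEC bit clear: the old value in vdst[lane] is preserved */
    }
}
```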

All V_CMPX instructions write the result of their comparison (one bit per thread) to both an SGPR (or VCC) and the EXEC mask.
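A minimal sketch of V_CMPX behaviour, using a signed 32-bit less-than compare as the example: each active thread contributes one result bit, and the same 64-bit mask is written both to the destination SGPR pair (or VCC) and to EXEC. The function name is hypothetical, a 64-thread wavefront is assumed, and the sketch assumes inactive threads contribute a 0 bit.

```c
#include <stdint.h>

#define WAVE_SIZE 64  /* assumption: 64 threads per wavefront */

/* Hypothetical V_CMPX-style "less than" on signed 32-bit inputs. */
uint64_t v_cmpx_lt_i32(const int32_t s0[WAVE_SIZE], const int32_t s1[WAVE_SIZE],
                       uint64_t *exec, uint64_t *sdst)
{
    uint64_t mask = 0;
    for (int lane = 0; lane < WAVE_SIZE; lane++) {
        /* assumption: inactive threads produce a 0 bit */
        if (((*exec >> lane) & 1u) && s0[lane] < s1[lane])
            mask |= (uint64_t)1 << lane;
    }
    *sdst = mask;   /* SGPR pair or VCC */
    *exec = mask;   /* EXEC is overwritten with the same mask */
    return mask;
}
```

Because EXEC is replaced by the comparison result, threads that fail the compare are disabled for the instructions that follow, which is how a V_CMPX can open a conditional region.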

Instructions producing a carry-out (integer add and subtract) write the carry-out bits to VCC when used in the VOP2 form, and to an arbitrary SGPR pair when used in the VOP3 form.
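The carry-out behaviour of V_ADD_CO_U32 can be sketched as a per-thread 32-bit add whose carry bits are gathered into a 64-bit mask; in the VOP2 form that mask lands in VCC, in the VOP3 form in the selected SGPR pair. The function name is hypothetical and a 64-thread wavefront is assumed.

```c
#include <stdint.h>

#define WAVE_SIZE 64  /* assumption: 64 threads per wavefront */

/* Hypothetical model of V_ADD_CO_U32: returns the per-thread carry mask. */
uint64_t add_co_u32(uint32_t dst[WAVE_SIZE], const uint32_t a[WAVE_SIZE],
                    const uint32_t b[WAVE_SIZE], uint64_t exec)
{
    uint64_t carry = 0;
    for (int lane = 0; lane < WAVE_SIZE; lane++) {
        if (!((exec >> lane) & 1u))
            continue;                             /* inactive thread */
        uint64_t sum = (uint64_t)a[lane] + b[lane];
        dst[lane] = (uint32_t)sum;                /* low 32 bits to the VGPR */
        if (sum >> 32)
            carry |= (uint64_t)1 << lane;         /* carry-out bit */
    }
    return carry;
}
```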

When the VOP3 form is used, instructions with a floating-point result can apply an output modifier (OMOD field) that multiplies the result by 0.5, 1.0, 2.0 or 4.0. Optionally, the result can be clamped (CLAMP field) to the range [0.0, +1.0].
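The output modifiers can be sketched as a scale followed by an optional clamp. This C sketch takes the OMOD multiplier directly as a float rather than decoding the OMOD field encoding, assumes OMOD is applied before CLAMP, and does not model hardware NaN handling (a NaN simply passes through here). The function name is hypothetical.

```c
#include <stdbool.h>

/* Hypothetical model of VOP3 OMOD and CLAMP on a float result.
 * omod_scale is expected to be 0.5f, 1.0f, 2.0f or 4.0f. */
float apply_output_modifiers(float result, float omod_scale, bool clamp)
{
    float r = result * omod_scale;     /* OMOD: scale by a power of two */
    if (clamp) {
        if (r < 0.0f)
            r = 0.0f;                  /* CLAMP lower bound */
        else if (r > 1.0f)
            r = 1.0f;                  /* CLAMP upper bound */
    }
    return r;                          /* NaN is passed through unchanged */
}
```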

In the table below, all codes can be used when the vector source field is nine bits; codes 0 to 255 can be used when the scalar source field is eight bits; codes 0 to 127 can be used when the scalar source field is seven bits; and codes 256 to 511 select a VGPR as the vector source or destination.

| Code | Meaning | Description |
|---|---|---|
| 0-101 | SGPR | 0 .. 101 |
| 102 | FLATSCR_LO | Flat Scratch[31:0]. |
| 103 | FLATSCR_HI | Flat Scratch[63:32]. |
| 104 | XNACK_MASK_LO | |
| 105 | XNACK_MASK_HI | |
| 106 | VCC_LO | vcc[31:0]. |
| 107 | VCC_HI | vcc[63:32]. |
| 108-123 | TTMP0 to TTMP15 | Trap handler temps (privileged). |
| 124 | M0 | |
| 125 | reserved | |
| 126 | EXEC_LO | exec[31:0]. |
| 127 | EXEC_HI | exec[63:32]. |
| 128 | 0 | |
| 129-192 | int 1 .. 64 | Integer inline constants. |
| 193-208 | int -1 .. -16 | |
| 209-234 | reserved | Unused. |
| 235 | SHARED_BASE | Memory aperture definition. |
| 236 | SHARED_LIMIT | |
| 237 | PRIVATE_BASE | |
| 238 | PRIVATE_LIMIT | |
| 239 | POPS_EXITING_WAVE_ID | Primitive Ordered Pixel Shading wave ID. |
| 240 | 0.5 | Single-, double-, or half-precision inline floats (codes 240-248). 1/(2*PI) is 0.15915494; the exact value used is half: 0x3118, single: 0x3e22f983, double: 0x3fc45f306dc9c882. |
| 241 | -0.5 | |
| 242 | 1.0 | |
| 243 | -1.0 | |
| 244 | 2.0 | |
| 245 | -2.0 | |
| 246 | 4.0 | |
| 247 | -4.0 | |
| 248 | 1/(2*PI) | |
| 249 | SDWA | |
| 250 | DPP | |
| 251 | VCCZ | { zeros, VCCZ } |
| 252 | EXECZ | { zeros, EXECZ } |
| 253 | SCC | { zeros, SCC } |
| 254 | LDS direct | Use LDS direct read to supply a 32-bit value. Vector-ALU instructions only. |
| 255 | Literal constant | 32-bit constant from the instruction stream. |
| 256-511 | VGPR | |

Table: Instruction Operands
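A decoder for the 9-bit source field can follow the table directly. The C sketch below groups codes into a few hypothetical classes and returns the values of the inline integer and single-precision float constants; the enum and function names are illustrative only. For code 248 it reinterprets the exact single-precision bit pattern 0x3e22f983 given in the table rather than computing 1/(2*PI).

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical operand classes for the 9-bit source field. */
typedef enum {
    OPND_SGPR, OPND_SPECIAL_SCALAR, OPND_INLINE_INT, OPND_INLINE_FLOAT,
    OPND_LITERAL, OPND_VGPR, OPND_OTHER
} operand_class;

operand_class classify_src(unsigned code)
{
    if (code <= 101) return OPND_SGPR;
    if (code <= 127) return OPND_SPECIAL_SCALAR; /* FLATSCR..EXEC_HI (125 reserved) */
    if (code <= 208) return OPND_INLINE_INT;     /* 0, 1..64, -1..-16 */
    if (code >= 240 && code <= 248) return OPND_INLINE_FLOAT;
    if (code == 255) return OPND_LITERAL;
    if (code >= 256 && code <= 511) return OPND_VGPR;
    return OPND_OTHER;  /* reserved, apertures, SDWA, DPP, VCCZ, EXECZ, SCC, LDS */
}

/* Value of an inline integer constant; code must be in 128..208. */
int32_t inline_int_value(unsigned code)
{
    if (code <= 192)
        return (int32_t)code - 128;              /* 128..192 -> 0..64 */
    return 192 - (int32_t)code;                  /* 193..208 -> -1..-16 */
}

/* Single-precision value of an inline float constant; code in 240..248. */
float inline_f32_value(unsigned code)
{
    static const float vals[8] = { 0.5f, -0.5f, 1.0f, -1.0f,
                                   2.0f, -2.0f, 4.0f, -4.0f };
    if (code == 248) {
        uint32_t bits = 0x3e22f983u;             /* exact 1/(2*PI) pattern */
        float f;
        memcpy(&f, &bits, sizeof f);
        return f;
    }
    return vals[code - 240];
}
```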

Out-of-Range GPRs¶

When a source VGPR is out-of-range, the instruction uses the value in VGPR0 as input.

When the destination GPR is out-of-range, the instruction executes but does not write the results.
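The two out-of-range rules can be sketched for a single thread's VGPR file as follows. `num_vgprs` stands for the number of VGPRs allocated to the wave and is a hypothetical parameter, as are the function names; the sketch only models VGPRs.

```c
#include <stdbool.h>
#include <stdint.h>

/* Source read: an out-of-range index reads VGPR0 instead. */
uint32_t read_vgpr(const uint32_t *vgprs, unsigned num_vgprs, unsigned index)
{
    return index < num_vgprs ? vgprs[index] : vgprs[0];
}

/* Destination write: an out-of-range index discards the result.
 * Returns true if the value was written. */
bool write_vgpr(uint32_t *vgprs, unsigned num_vgprs, unsigned index,
                uint32_t value)
{
    if (index >= num_vgprs)
        return false;      /* instruction executes, result is dropped */
    vgprs[index] = value;
    return true;
}
```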

Instructions¶

The table below lists the complete VALU instruction set by microcode encoding.

| VOP3 | VOP3 - 1-2 operand opcodes | VOP2 | VOP1 |
|---|---|---|---|
| V_MAD_LEGACY_F32 | V_ADD_F64 | V_ADD_{F16,F32,U16,U32} | V_NOP |
| V_MAD_{F16,I16,U16,F32} | V_MUL_F64 | V_SUB_{F16,F32,U16,U32} | V_MOV_B32 |
| V_MAD_LEGACY_{F16,U16,I16} | V_MIN_F64 | V_SUBREV_{F16,F32,U16,U32} | |
| V_MAD_I32_I24 | V_MAX_F64 | V_ADD_CO_U32 | V_READFIRSTLANE_B32 |
| V_MAD_U32_U24 | V_LDEXP_F64 | V_SUB_CO_U32 | V_CVT_F32_{I32,U32,F16,F64} |
| V_CUBEID_F32 | V_MUL_LO_U32 | V_SUBREV_CO_U32 | V_CVT_{I32,U32,F16,F64}_F32 |
| V_CUBESC_F32 | V_MUL_HI_{I32,U32} | V_ADDC_U32 | V_CVT_{I32,U32}_F64 |
| V_CUBETC_F32 | V_LSHLREV_B64 | V_SUBB_U32 | V_CVT_F64_{I32,U32} |
| V_CUBEMA_F32 | V_LSHRREV_B64 | V_SUBBREV_U32 | V_CVT_F32_UBYTE{0,1,2,3} |
| V_BFE_{U32,I32} | V_ASHRREV_I64 | V_MUL_LEGACY_F32 | V_CVT_F16_{U16,I16} |
| V_FMA_{F16,F32,F64} | V_LDEXP_F32 | V_MUL_{F16,F32} | V_CVT_RPI_I32_F32 |
| V_FMA_LEGACY_F16 | V_READLANE_B32 | V_MUL_I32_I24 | V_CVT_FLR_I32_F32 |
| V_BFI_B32 | V_WRITELANE_B32 | V_MUL_HI_I32_I24 | V_CVT_OFF_F32_I4 |
| V_LERP_U8 | V_BCNT_U32_B32 | V_MUL_U32_U24 | V_FRACT_{F16,F32,F64} |
| V_ALIGNBIT_B32 | V_MBCNT_LO_U32_B32 | V_MUL_HI_U32_U24 | V_TRUNC_{F16,F32,F64} |
| V_ALIGNBYTE_B32 | V_MBCNT_HI_U32_B32 | V_MIN_{F16,U16,I16,F32,I32,U32} | V_CEIL_{F16,F32,F64} |
| V_MIN3_{F32,I32,U32} | V_CVT_PKACCUM_U8_F32 | V_MAX_{F16,U16,I16,F32,I32,U32} | V_RNDNE_{F16,F32,F64} |
| V_MAX3_{F32,I32,U32} | V_CVT_PKNORM_I16_F32 | V_LSHRREV_{B16,B32} | V_FLOOR_{F16,F32,F64} |
| V_MED3_{F32,I32,U32} | V_CVT_PKNORM_U16_F32 | V_ASHRREV_{I16,I32} | V_EXP_{F16,F32} |
| V_SAD_{U8,HI_U8,U16,U32} | V_CVT_PKRTZ_F16_F32 | V_LSHLREV_{B16,B32} | V_LOG_{F16,F32} |
| V_CVT_PK_U8_F32 | V_CVT_PK_U16_U32 | V_AND_B32 | V_RCP_{F16,F32,F64} |
| V_DIV_FIXUP_{F16,F32,F64} | V_CVT_PK_I16_I32 | V_OR_B32 | V_RCP_IFLAG_F32 |
| V_DIV_FIXUP_LEGACY_F16 | V_MAC_LEGACY_F32 | V_XOR_B32 | V_RSQ_{F16,F32,F64} |
| V_DIV_SCALE_{F32,F64} | V_BFM_B32 | V_MAC_{F16,F32} | V_SQRT_{F16,F32,F64} |
| V_DIV_FMAS_{F32,F64} | V_INTERP_P1_F32 | V_MADMK_{F16,F32} | V_SIN_{F16,F32} |
| V_MSAD_U8 | V_INTERP_P2_F32 | V_MADAK_{F16,F32} | V_COS_{F16,F32} |
| V_QSAD_PK_U16_U8 | V_INTERP_MOV_F32 | V_CNDMASK_B32 | V_NOT_B32 |
| V_MQSAD_PK_U16_U8 | V_INTERP_P1LL_F16 | V_LDEXP_F16 | V_BFREV_B32 |
| V_MQSAD_PK_U32_U8 | V_INTERP_P1LV_F16 | V_MUL_LO_U16 | V_FFBH_{U32,I32} |
| V_TRIG_PREOP_F64 | V_INTERP_P2_F16 | | V_FFBL_B32 |
| V_MAD_{U64_U32,I64_I32} | V_INTERP_P2_LEGACY_F16 | | V_FREXP_EXP_I32_F64 |
| V_CVT_PKNORM_I16_F16 | | | V_FREXP_MANT_{F16,F32,F64} |
| V_CVT_PKNORM_U16_F16 | | | V_FREXP_EXP_I32_F32 |
| V_MAD_U32_U16 | | | V_FREXP_EXP_I16_F16 |
| V_MAD_I32_I16 | | | V_CLREXCP |
| V_XAD_U32 | | | V_MOV_FED_B32 |
| V_MIN3_{F16,I16,U16} | | | V_CVT_NORM_I16_F16 |
| V_MAX3_{F16,I16,U16} | | | V_CVT_NORM_U16_F16 |
| V_MED3_{F16,I16,U16} | | | V_SAT_PK_U8_I16 |
| V_CVT_PKNORM_{I16_F16,U16_F16} | | | V_WRITELANE_REGWR |
| V_READLANE_REGRD_B32 | | | V_SWAP_B32 |
| V_PACK_B32_F16 | | | V_SCREEN_PARTITION_4SE_B32 |

Table: VALU Instruction Set

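| Op | Formats | Functions | Result |
|---|---|---|---|
| V_CMP | I16, I32, I64, U16, U32, U64 | F, LT, EQ, LE, GT, NE, GE, T | Write VCC. |
| V_CMPX | I16, I32, I64, U16, U32, U64 | Same as V_CMP. | Write VCC and EXEC. |
| V_CMP | F16, F32, F64 | F, LT, EQ, LE, GT, LG, GE, T, O, U, NGE, NLG, NGT, NLE, NEQ, NLT (O = ordered: neither input is NaN; U = unordered: either input is NaN; N prefix = negated compare: true if either input is NaN, otherwise the negation of the ordinary compare) | Write VCC. |
| V_CMPX | F16, F32, F64 | Same as V_CMP. | Write VCC and EXEC. |
| V_CMP_CLASS | F16, F32, F64 | Test for one of: signaling-NaN, quiet-NaN, positive or negative infinity, normal, denormal, or zero. | Write VCC. |
| V_CMPX_CLASS | F16, F32, F64 | Same as V_CMP_CLASS. | Write VCC and EXEC. |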

Table: VALU Instruction Set
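The floating-point compare functions differ mainly in how they treat NaN inputs. The C sketch below models a few of them for a single thread with F32 inputs; the function names are hypothetical, and NaN is detected with the IEEE property that a NaN compares unequal to itself (so it should not be built with fast-math options).

```c
#include <stdbool.h>

/* A NaN is the only value that compares unequal to itself. */
static bool is_nan(float x) { return x != x; }

bool cmp_o(float a, float b)   { return !is_nan(a) && !is_nan(b); } /* ordered */
bool cmp_u(float a, float b)   { return is_nan(a) || is_nan(b); }   /* unordered */
bool cmp_lt(float a, float b)  { return a < b; }        /* false if either is NaN */
bool cmp_nlt(float a, float b) { return !(a < b); }     /* true if either is NaN */
bool cmp_lg(float a, float b)  { return a < b || a > b; } /* false if either is NaN */
bool cmp_neq(float a, float b) { return !(a == b); }    /* true if either is NaN */
```

For ordinary (non-NaN) inputs, NLT gives the same answer as GE; the two differ only when an input is NaN, where NLT is true and GE is false.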

Denormalized and Rounding Modes¶

The shader program has explicit control over the rounding mode applied and the handling of denormalized inputs and results. The MODE register is set using the S_SETREG instruction; it has separate bits for controlling the behavior of single- and double-precision floating-point numbers.

| Field | Bit Position | Description |
|---|---|---|
| FP_ROUND | 3:0 | [1:0] Single-precision round mode. [3:2] Double-precision round mode. Round modes: 0 = nearest even, 1 = +infinity, 2 = -infinity, 3 = toward zero. |
| FP_DENORM | 7:4 | [5:4] Single-precision denormal mode. [7:6] Double-precision denormal mode. Denormal modes: 0 = flush input and output denorms; 1 = allow input denorms, flush output denorms; 2 = flush input denorms, allow output denorms; 3 = allow input and output denorms. |

Table: Round and Denormal Modes
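The bit layout in this table can be turned into a small helper that assembles MODE bits [7:0] from the four 2-bit fields, for example before writing them with S_SETREG. The enum and function names are hypothetical, and the hardware-register ID and assembler syntax for S_SETREG are not shown.

```c
#include <stdint.h>

/* Round modes and denormal modes as listed in the table (hypothetical names). */
enum { ROUND_NEAREST_EVEN = 0, ROUND_PLUS_INF = 1,
       ROUND_MINUS_INF = 2, ROUND_TOWARD_ZERO = 3 };
enum { DENORM_FLUSH_ALL = 0,   /* flush input and output denorms */
       DENORM_ALLOW_IN = 1,    /* allow input, flush output */
       DENORM_ALLOW_OUT = 2,   /* flush input, allow output */
       DENORM_ALLOW_ALL = 3 }; /* allow input and output */

/* Build MODE bits [7:0] from the FP_ROUND and FP_DENORM sub-fields. */
uint32_t pack_mode_fp(unsigned round_sp, unsigned round_dp,
                      unsigned denorm_sp, unsigned denorm_dp)
{
    return  (round_sp  & 3u)        |   /* FP_ROUND[1:0]  */
           ((round_dp  & 3u) << 2)  |   /* FP_ROUND[3:2]  */
           ((denorm_sp & 3u) << 4)  |   /* FP_DENORM[5:4] */
           ((denorm_dp & 3u) << 6);     /* FP_DENORM[7:6] */
}

/* Extract the single-precision denormal mode from a MODE value. */
unsigned mode_denorm_sp(uint32_t mode) { return (mode >> 4) & 3u; }
```

For example, round-to-nearest-even for both precisions, flushed single-precision denorms, and fully preserved double-precision denorms pack to 0xC0.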

ALU Clamp Bit Usage¶

In GCN Vega Generation, the meaning of the “Clamp” bit in VALU instructions has changed. For V_CMP instructions, setting the clamp bit to 1 indicates that the compare signals if a floating-point exception occurs. For integer operations, it clamps the result to the largest and smallest representable values. For floating-point operations, it clamps the result to the range [0.0, 1.0].
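For integer operations the clamp bit turns wrapping arithmetic into saturating arithmetic. The C sketch below shows this for an unsigned 32-bit add and a signed 16-bit add; `add_u32` and `add_i16` are hypothetical helpers, and which specific opcodes honor the clamp bit should be checked against their individual descriptions.

```c
#include <stdbool.h>
#include <stdint.h>

/* Unsigned 32-bit add: wraps without clamp, saturates at UINT32_MAX with it. */
uint32_t add_u32(uint32_t a, uint32_t b, bool clamp)
{
    uint64_t sum = (uint64_t)a + b;
    if (clamp && sum > UINT32_MAX)
        return UINT32_MAX;
    return (uint32_t)sum;
}

/* Signed 16-bit add: saturates to [INT16_MIN, INT16_MAX] with clamp. */
int16_t add_i16(int16_t a, int16_t b, bool clamp)
{
    int32_t sum = (int32_t)a + b;
    if (clamp) {
        if (sum > INT16_MAX) return INT16_MAX;
        if (sum < INT16_MIN) return INT16_MIN;
    }
    return (int16_t)(uint16_t)sum;    /* wrap modulo 2^16 */
}
```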

VGPR Indexing¶

VGPR indexing allows a value stored in the M0 register to act as an index into the VGPRs, for either the source or the destination registers of VALU instructions.
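Conceptually, indexing lets a range of VGPRs behave like an array addressed at run time. This C sketch assumes that, when indexing is enabled for an operand, the index held in M0 is added to the VGPR number encoded in the instruction; the layout of M0 and the per-operand enable bits set by the indexing instructions are not modelled, and all names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Effective VGPR number for one operand (assumption: base + M0 index). */
unsigned effective_vgpr(unsigned encoded_vgpr, uint32_t m0_index,
                        bool indexing_enabled)
{
    return indexing_enabled ? encoded_vgpr + m0_index : encoded_vgpr;
}

/* Example use: treat v[base], v[base+1], ... as an array and read element i. */
uint32_t read_indexed(const uint32_t *vgprs, unsigned base, uint32_t i)
{
    return vgprs[effective_vgpr(base, i, true)];
}
```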

Indexing Instructions¶

The table below describes the instructions which enable, disable and control VGPR indexing.

Instruction |

Encoding |

Sets SCC? |

Operation |

|---|---|---|---|

S_SET_GPR_IDX_OFF |